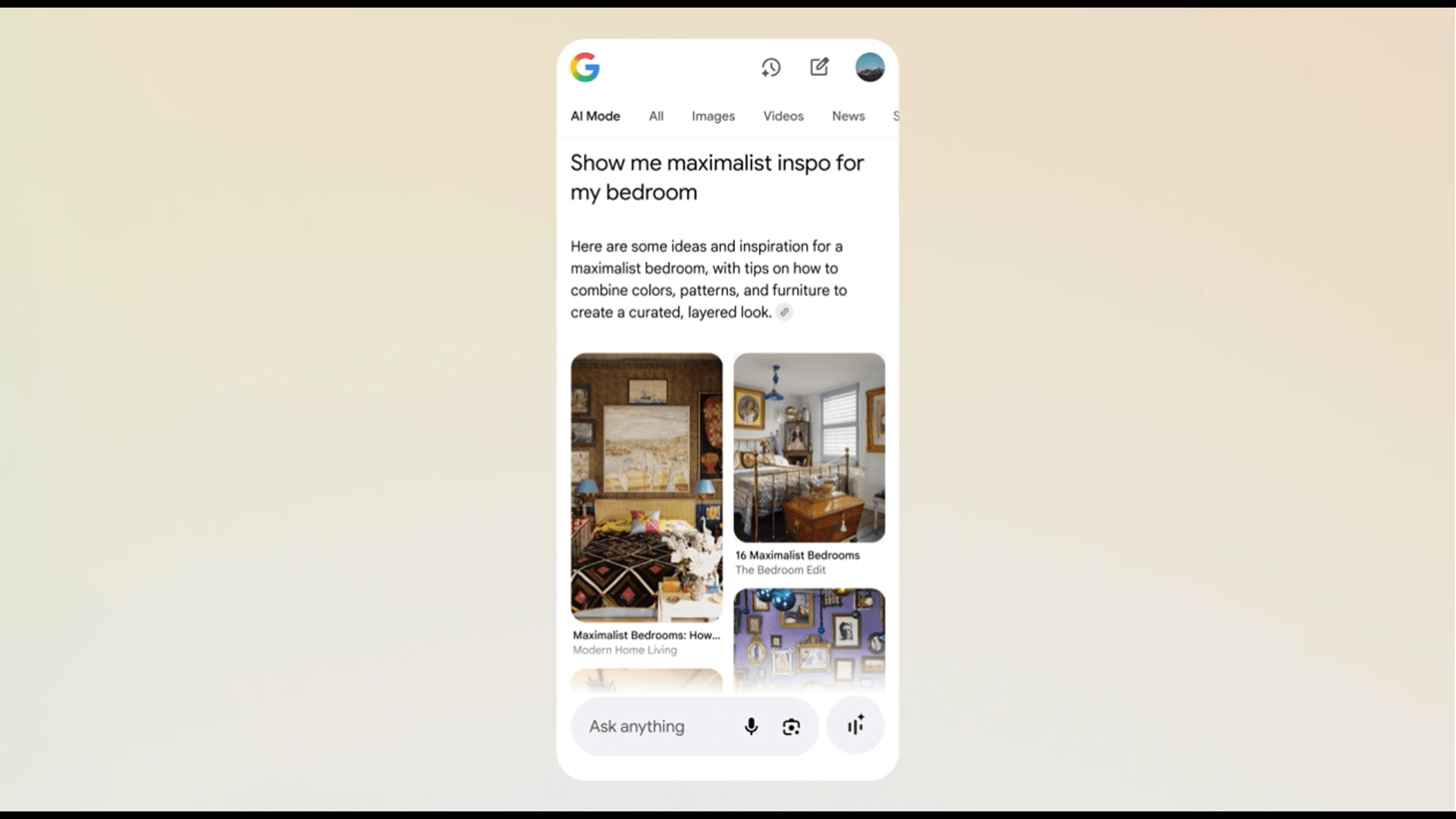

Google has added a whole new dimension to AI Mode, its Gemini-powered search experience, by adding images to the text and links the conversational search platform already provides.

AI Mode now brings in Google Image Search and Google Lens alongside the Gemini AI engine, allowing you to ask a question about a photo you upload, or to see images related to your questions.

For example, you might see someone on the street with an aesthetic you like, take a photo, and ask AI Mode to “show this style in lighter shades.” Or you could ask for “retro 1950s living room designs” and see what people were sitting on about 75 years ago. Google is pitching the feature as a way to replace clunky filters and keywords with natural conversation.

The visual side of AI Mode uses a “visual search fan-out” approach, which builds on the fan-out technique AI Mode already uses to answer questions.

When you upload or point to an image, AI Mode breaks it down into elements such as objects, background, color, and texture, and sends several internal queries in parallel. This way, it returns relevant images rather than simply repeating what you have already shared. It then recombines the results that best match your intent.
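The decompose, query-in-parallel, and recombine steps described above can be illustrated with a toy Python sketch. This is not Google's implementation: the `TOY_INDEX` catalog, the facet dictionary, and the helpers `decompose`, `search`, and `fan_out` are all hypothetical, and the "recombine" step here simply ranks images by how many sub-queries they matched while leaving out the user's own photo.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy index (not a real Google API): image id -> descriptive tags.
TOY_INDEX = {
    "img_a": {"jacket", "denim", "light-blue", "street"},
    "img_b": {"jacket", "leather", "black"},
    "img_c": {"denim", "light-blue", "studio"},
    "img_d": {"sofa", "retro", "orange"},
    "query_photo": {"jacket", "denim", "dark-blue", "street"},
}


def decompose(facets: dict[str, str]) -> list[str]:
    """Turn each facet of the image (object, material, color...) into its own sub-query."""
    return list(facets.values())


def search(sub_query: str) -> list[str]:
    """Stand-in for one internal query: ids of images tagged with the term."""
    return [img for img, tags in TOY_INDEX.items() if sub_query in tags]


def fan_out(facets: dict[str, str], exclude: str, max_results: int = 3) -> list[str]:
    """Run all sub-queries in parallel, then merge and rank the combined results."""
    sub_queries = decompose(facets)
    with ThreadPoolExecutor() as pool:
        result_lists = list(pool.map(search, sub_queries))
    # Recombine: score each image by how many sub-queries it matched,
    # skipping the photo the user supplied so it is not simply echoed back.
    scores = Counter(
        img for results in result_lists for img in results if img != exclude
    )
    # Highest score first; ties broken alphabetically for a stable order.
    ranked = sorted(scores.items(), key=lambda kv: (-kv[1], kv[0]))
    return [img for img, _ in ranked[:max_results]]


if __name__ == "__main__":
    # "Show this style in lighter shades": keep the object and material,
    # but swap the photo's dark-blue color facet for light-blue.
    facets = {"object": "jacket", "material": "denim", "color": "light-blue"}
    print(fan_out(facets, exclude="query_photo"))
```

With these toy tags, `img_a` matches all three sub-queries and ranks first, while the user's own `query_photo` never appears in the output. A real system would rank with learned relevance signals rather than a simple match count.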

Of course, this also means Google’s search engine must decide which AI-retrieved results to highlight and when to filter out noise. It could misinterpret your intent, surface sponsored products, or favor major brands whose images are better optimized for AI. As search becomes more image-focused, sites that lack clean visuals or visual metadata could disappear from the results, making the experience less useful.

As for shopping, all of this ties into Google’s Shopping Graph, which indexes more than 50 billion product listings and is refreshed every hour. So a photo of a pair of jeans could bring up current local prices, reviews, and availability all at once.

AI Mode’s ability to turn vague prompts and visuals into real options for shopping, learning, or even discovering art is a big deal, at least if it works well. Google’s scale lets it merge search, image processing, and e-commerce into a single flow.

Plenty of worried competitors will be watching closely, even though some of them got there before Google with similar products. For example, Pinterest Lens already lets you find similar looks from images, and Microsoft’s Bing Visual Search lets you start a search from an image in one way or another.

But few combine a global product database and live pricing data with conversational search through images. Specialized apps or niche players may pull ahead in particular areas, but Google’s size and ubiquity give it a massive head start as people more broadly try to search for information with images.

For years, we have typed queries and sifted through the results. Now, online search is moving toward spotting, pointing, and describing, letting AI and search engines interpret not only our words but also what we see and feel.

As thinking and design become more visual, systems that natively understand images become increasingly critical, and the evolution of AI suggests the baseline may soon be search tools that can both see and read.

If missteps creep in, however, all bets are off. If AI Mode’s visual results misread what users want, mislead them, or show major biases, users may return to brute-force filtering or more specialized options. The success of this leap into visual AI depends on whether it feels useful or unreliable.