- Planned software update adds external accelerator role to Nvidia’s DGX Spark

- MacBook Pro users can transfer heavy AI processing to the external system

- Performance and tooling changes focus on local open source AI

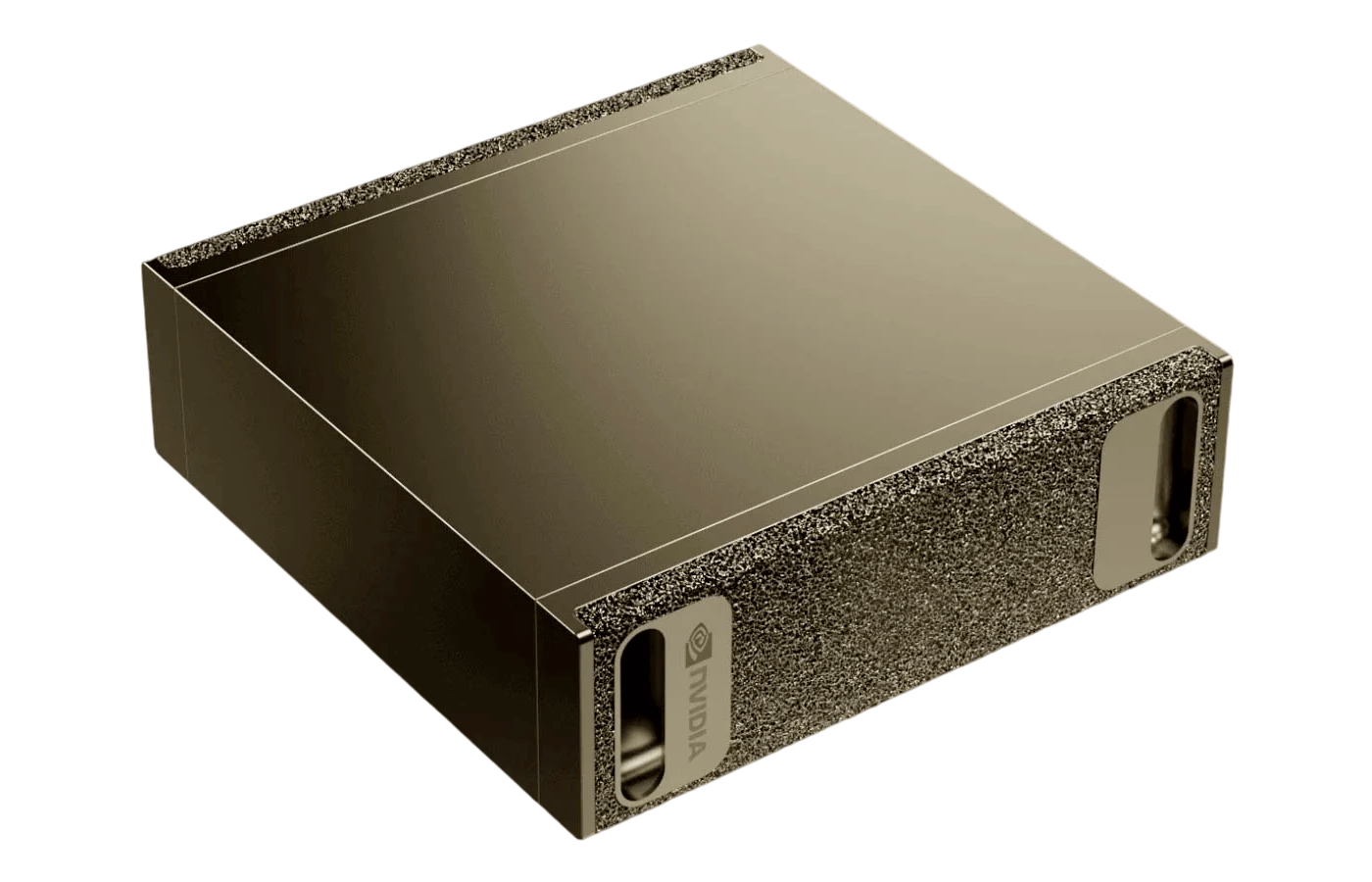

At CES 2026, Nvidia revealed that it is planning a software update for DGX Spark that will significantly expand the device’s capabilities.

Powered by the GB10 Grace Blackwell superchip, Nvidia’s little powerhouse combines CPU and GPU cores with 128GB of unified memory, allowing users to load and run large language models locally without relying on cloud infrastructure.

Early reviews of the Spark, while universally positive, highlighted the software’s limitations. This is a problem that Nvidia is looking to solve. A key part of the change will be expanded support for open source AI frameworks and models.

Good news for MacBook Pro users

This will be a software-only change, with no new hardware. For organizations that rely on open tools, the changes will reduce custom configuration work and help keep systems behaving consistently as models and frameworks evolve.

The update to the mini PC will add support for tools like PyTorch, vLLM, SGLang, llama.cpp, and LlamaIndex, as well as models from Qwen, Meta, Stability, and Wan.

Nvidia says users can expect up to 2.5x performance gains over the Spark as it shipped at launch, primarily due to updates to TensorRT-LLM, tighter quantization, and decoding improvements.

One example shared by Nvidia involved Qwen-235B, which more than doubles throughput when switching from FP8 to NVFP4 with speculative decoding. Other workloads, including Qwen3-30B and Stable Diffusion 3.5 Large, show smaller gains.
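To put the FP8-to-NVFP4 switch in context, here is a rough back-of-the-envelope sketch of how much memory a model's weights need at each precision. It is an illustration, not Nvidia's method: it assumes "235B" means 235 billion parameters, counts only the raw weights at 8 and 4 bits each, and ignores quantization scale factors, the KV cache, and activations. The function name `weight_footprint_gb` is hypothetical.

```python
def weight_footprint_gb(num_params: float, bits_per_weight: float) -> float:
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes).

    Assumption: every parameter is stored at the same precision and
    nothing else (scale factors, KV cache, activations) is counted.
    """
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: "235B" in the model name means 235 billion parameters.
PARAMS_235B = 235e9

fp8_gb = weight_footprint_gb(PARAMS_235B, 8)  # 8-bit weights
fp4_gb = weight_footprint_gb(PARAMS_235B, 4)  # 4-bit weights

print(f"8-bit weights: {fp8_gb:.1f} GB")
print(f"4-bit weights: {fp4_gb:.1f} GB")
```

Under these assumptions, halving the bits per weight halves the weight storage, which is one reason lower-precision formats matter on a machine with a fixed 128GB of unified memory. The throughput figures quoted above are Nvidia's own and are not derived from this calculation.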

The update also introduces DGX Spark playbooks that bundle tools, templates, and configuration guides into reusable workflows. These are designed to work on-premises without rebuilding entire environments.

An interesting demo paired a MacBook Pro with DGX Spark for AI video generation. Nvidia showed a 4K pipeline that took eight minutes on the laptop and about a minute when heavy calculation steps were transferred to the Spark.

The approach keeps the authoring tools on the laptop while Spark handles the heavy processing, bringing AI video work closer to interactive use instead of lengthy batch runs.

DGX Spark can also serve as a background processor for 3D workflows, generating assets while creatives continue to work on their main systems.

A local Nsight Copilot is included, providing CUDA coding assistance without sending code or data to the cloud.

Overall, the planned update will move DGX Spark from a standalone development system to a flexible on-premises AI node capable of supporting laptops, desktops, and edge deployments.

Via Storage Review

TechRadar will cover this year’s CES extensively and will bring you all the big announcements as they happen. Visit our CES 2026 News for the latest stories and our hands-on verdicts on everything from wireless TVs and foldable displays to new phones, laptops, smart home gadgets and the latest in AI. You can also ask us a question about the show in our Live Q&A from CES 2026 and we will do our best to answer it.

And don’t forget to follow us on TikTok and WhatsApp for the latest news from CES!