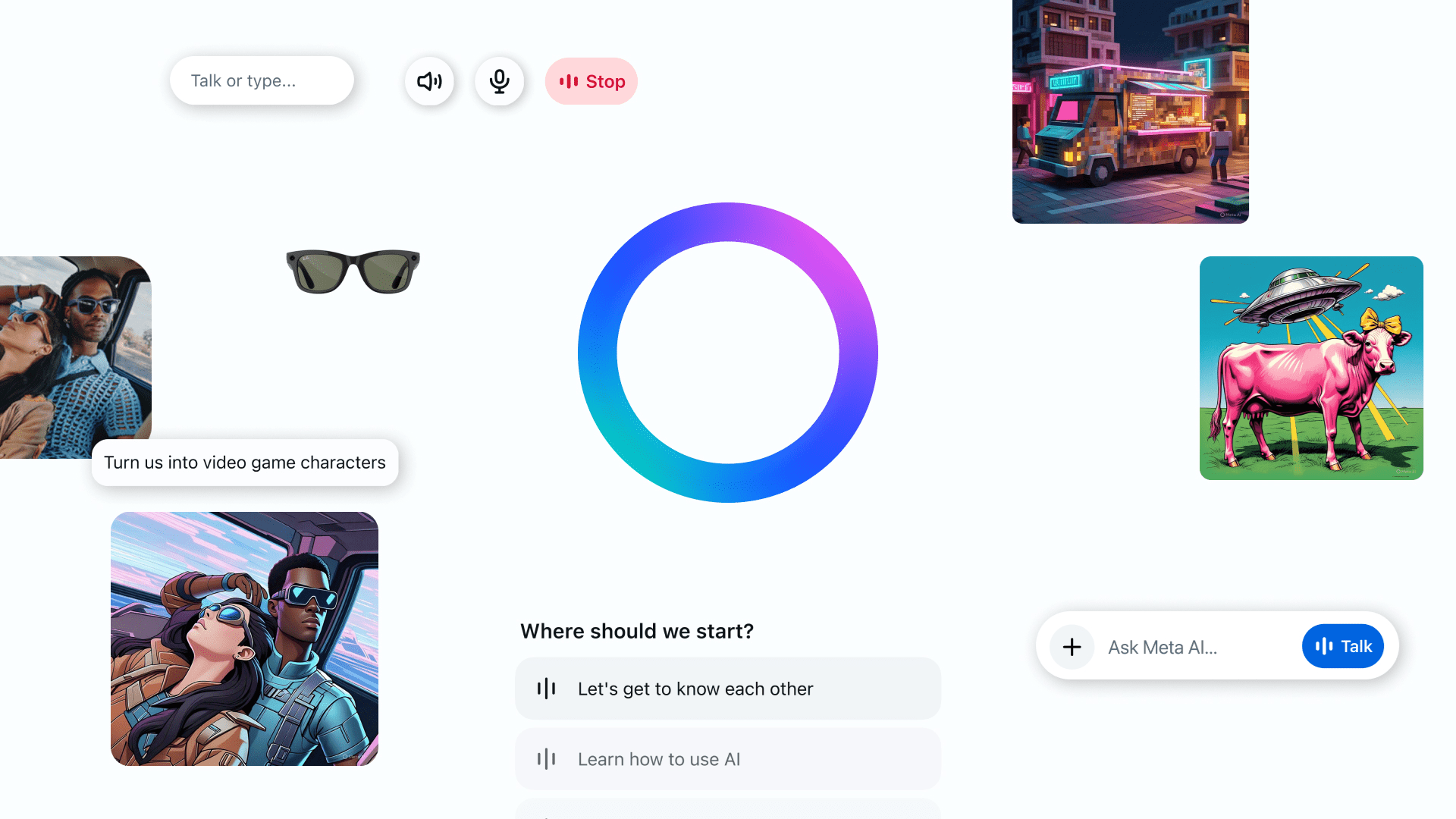

- Meta has launched a new standalone app for its Meta AI assistant, powered by Llama 4

- The application connects to Meta platforms and devices, including Ray-Ban Meta smart glasses

- Meta AI personalizes its behavior based on your Instagram and Facebook activity

Meta AI is moving into its own space with the launch of a new standalone app. Powered by Meta's new Llama 4 AI model, the app is both a standalone product and a replacement for Meta View, which was previously used to connect to the Ray-Ban Meta smart glasses.

Meta is making a big play here, positioning voice interactions as the most intuitive and natural way to interact with your AI. The app supports hands-free chat and even includes a full-duplex speech demo, a feature that lets you talk and listen at the same time.

That is very useful given how keen Meta is to connect Meta AI to the company's wider product portfolio, especially the Ray-Ban Meta smart glasses. These AI-enabled glasses will now work through the Meta AI app, replacing the Meta View app they currently rely on.

This means you can start a conversation on one platform and easily switch to another. All you have to do is open the Devices tab in the app to carry over your settings and saved information.

Ask a question through your smart glasses, get an answer from Meta AI, then pick up the same thread on your phone or desktop later. You can go from a voice chat on your glasses to reading the conversation in the history tab of your app. For example, you could be out for a walk and ask Meta AI through your glasses to find a bookstore nearby. The answer will be saved in your Meta AI app for later review.

The other major element of the Meta AI app is the Discover feed. There you can see what other people have shared publicly, such as successful prompt ideas and images they have generated, then remix them for your own purposes.

In addition, Meta AI’s desktop version has been revamped with a new interface and more image generation options. There is also an experimental document editor for composing and editing text, adding visuals, and exporting the result as a PDF.

Meta has spent several months rolling out Meta AI across Instagram, Facebook, Messenger, and WhatsApp, but this is the first time Meta AI has not been housed inside another mobile app.

The AI's connection to Meta's other apps gives it an advantage (or a flaw, depending on your view) by letting it adapt its behavior to what you do in those apps. Meta AI draws on your Instagram and Facebook activity to personalize its answers.

Ask it where to go for dinner, and it might suggest a ramen place your friend posted about last week. Ask for advice on an upcoming vacation, and it will remember that you once posted that you like to “travel light but emotionally overpack” and suggest an itinerary that might fit that attitude.

Meta clearly wants Meta AI to be central to all your digital activities. The way the company is pitching the app, it seems you will be checking in with it constantly, whether on your phone or on your head.

There are obvious parallels with the ChatGPT app in terms of style. But Meta seems to want to differentiate its app from OpenAI's creation by emphasizing the personal over the broader utility of an AI assistant.

And if there is one thing Meta has more of than almost anyone, it is personal data. Meta AI drawing on your social data, your voice habits, and even your smart glasses to provide answers tailored to you feels very on-brand.

The idea of Meta AI forming a mental scrapbook of your life based on what you liked on Instagram or posted on Facebook may not appeal to everyone, of course. But if you are worried, you can always put on your smart glasses and ask Meta AI for help.