As we expected, WWDC 2025, and mainly the opening keynote, came and went without an official update on Siri. Apple is still working on the AI-infused update, which is essentially a much more personable and capable virtual assistant. TechRadar editor Lance Ulanoff broke down the details of what is causing the delay after a conversation with Craig Federighi.

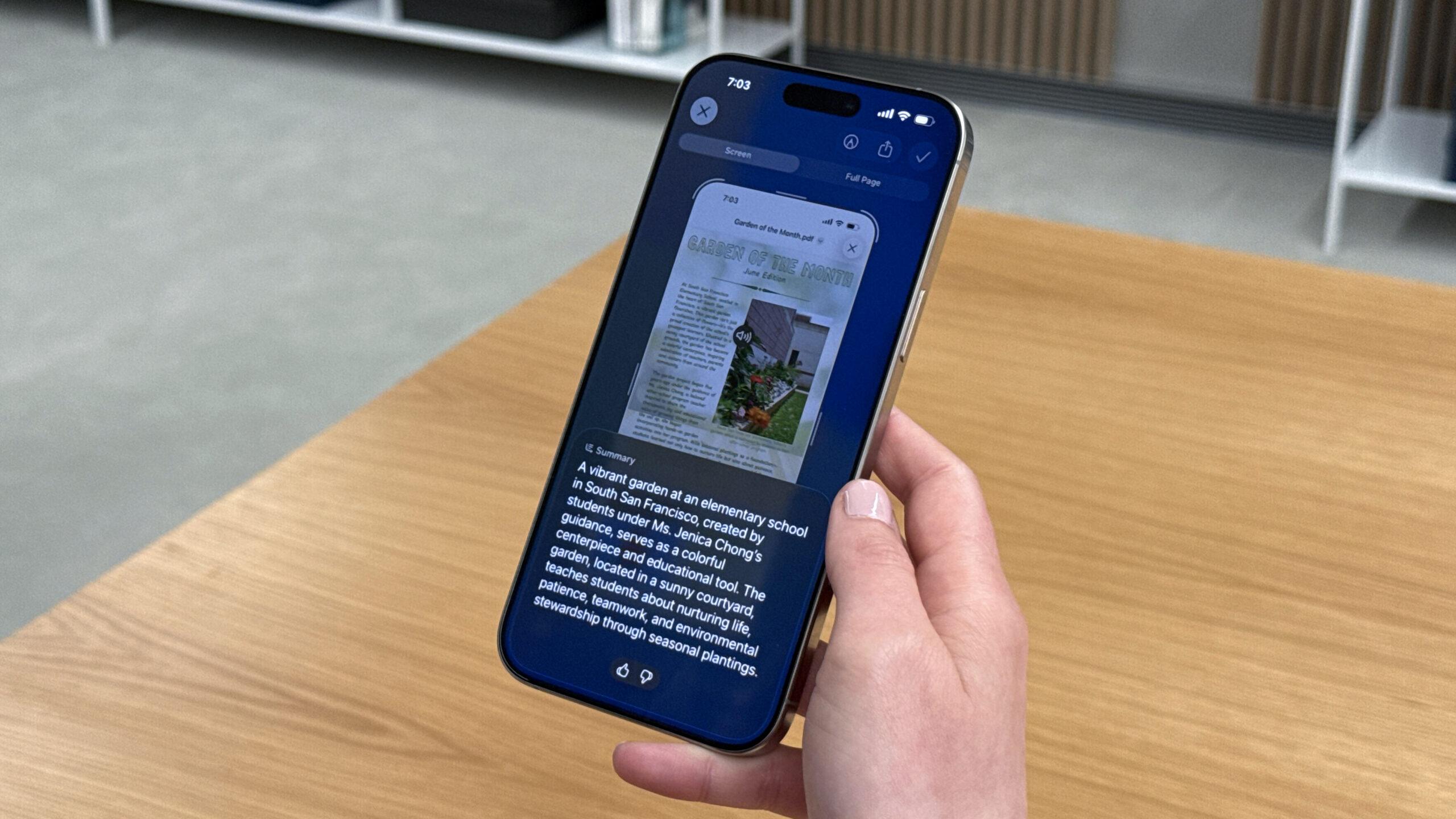

Now, even without the AI-infused Siri, Apple has delivered a fairly sizable upgrade to Apple Intelligence, but it's not necessarily where you'd think. It's giving Visual Intelligence, a feature exclusive to the iPhone 16 family, iPhone 15 Pro and iPhone 15 Pro Max, an upgrade with on-screen awareness and a new way to search, all housed in the power of a screenshot.

It's a companion feature to the original set of Visual Intelligence know-how: a long press on the Camera Control button (or a customized Action button on the 15 Pro) pulls up a live view from your iPhone's camera with the option to take a photo, as well as to "Ask" or "Search" about what your iPhone sees.

It's something like a more basic version of Google Lens, in that you can identify plants and pets and search visually. Much of that won't change with iOS 26, but you will be able to use Visual Intelligence on screenshots. After a brief demo at WWDC 2025, I can't wait to use it again.

Visual Intelligence makes screenshots much more actionable and could potentially save space on your iPhone, especially if your Photos app looks like mine and is filled with screenshots. The big play from Apple here is that it gives us a taste of on-screen awareness.

In the demo I saw, a screenshot of a message thread containing a poster for an upcoming movie night revealed a glimpse of the new interface. It's the classic iPhone screenshot interface, but the familiar "Ask" sits at the bottom left and "Search" on the right, while in the middle is an Apple Intelligence suggestion that varies depending on the screenshot.

In this case, it was "Add to Calendar", which let me easily create an invite with the name of the movie night at the right date and time, as well as the location. Essentially, it identifies the elements in the screenshot and extracts the relevant information.

Pretty neat! Rather than just having a screenshot of the image, you can have an actionable event added to your calendar in a few seconds. It also bakes in a feature that I think many iPhone owners will appreciate, even if Android phones like the best Pixels or the Galaxy S25 Ultra have been able to do this for a while.

Apple Intelligence will offer these suggestions when it deems them appropriate. That could mean creating an invite or a reminder, translating other languages into your preferred one, summarizing text, or even reading it aloud.

All of this is very handy, but say you're scrolling TikTok or Instagram Reels and spot a product, maybe a nice button-down or a poster that catches your eye. Visual Intelligence has a solution for that, and it's something like Apple's answer to "Circle to Search" on Android.

You take a screenshot, and once it's captured, you simply scrub over the part of the image you want to search. It's a similar on-screen effect to selecting an object to remove with "Clean Up" in Photos, but afterward it lets you search for the selection via Google or ChatGPT. Other apps can also opt in to this through an API that Apple provides.

And this is where it gets pretty exciting: you can scroll through all the available places to search, like Etsy or Amazon. I think this will be a fan favorite when it ships, though it's not quite a reason to go out and buy an iPhone that supports Visual Intelligence. At least, not yet.

In addition, if you'd rather search the whole screenshot, that's where the "Ask" and "Search" buttons come into play. With them, you can use Google or ChatGPT. Beyond the ability to analyze and suggest via screenshots, or search with a selection, Apple is also expanding the kinds of things Visual Intelligence can recognize, from pets and plants to books, landmarks and works of art.

Not all of this was available right at launch, but Apple is clearly working to expand the capabilities of Visual Intelligence and improve the Apple Intelligence feature set as a whole. Since this gives us a glimpse of on-screen awareness, I'm quite excited.