- Anthropic's Claude chatbot now has an on-demand memory feature

- The AI will recall past chats only when a user specifically asks it to

- The feature is rolling out first to Max, Team and Enterprise subscribers before expanding to other plans

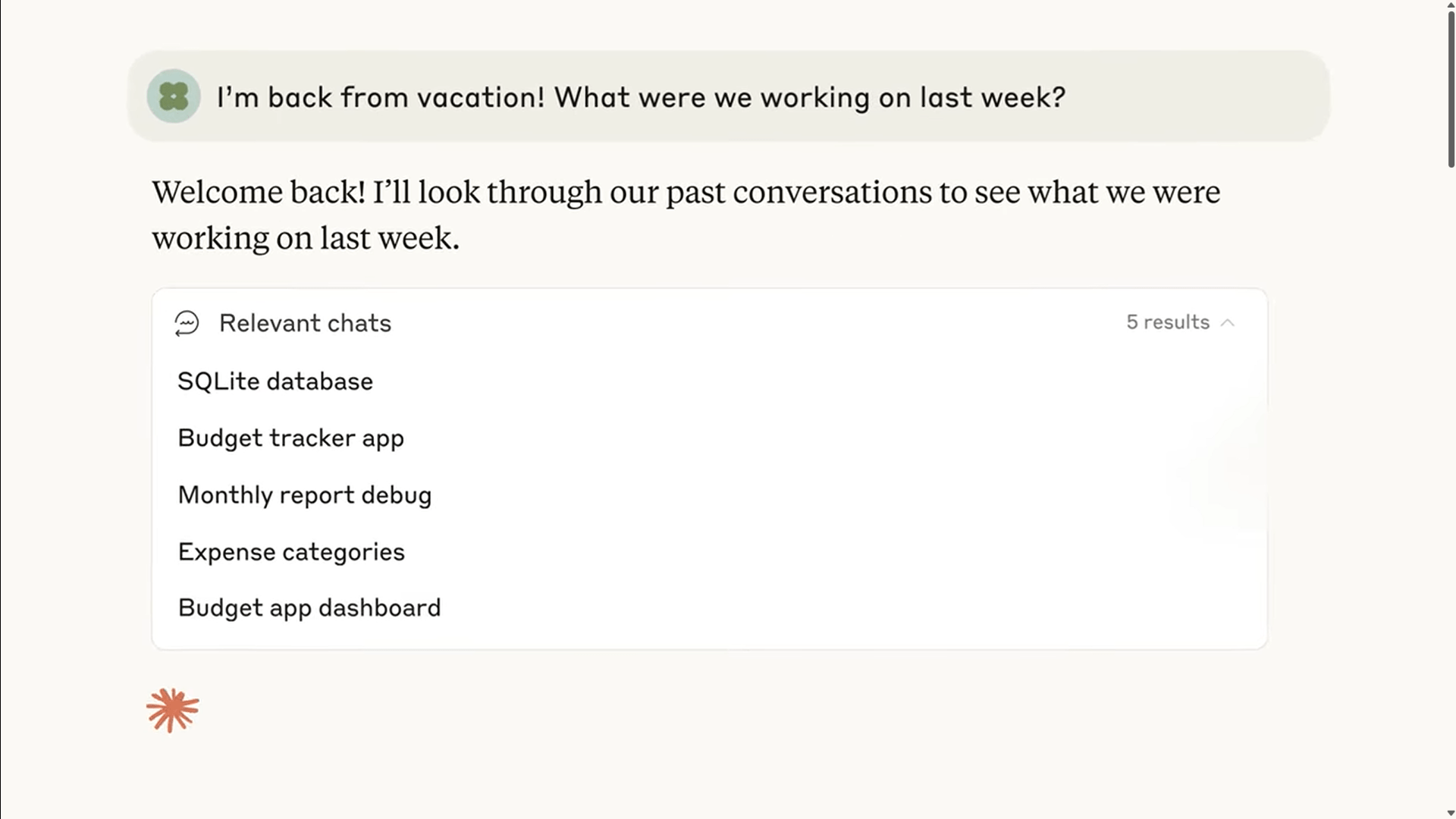

Anthropic has given Claude a memory upgrade, but it will only activate when you choose. The new feature allows Claude to recall past conversations, giving the chatbot information that helps it continue previous projects and apply what you have discussed before to your next conversation.

The update is arriving for Claude's Max, Team and Enterprise subscribers, though it will probably become more widely available at some point. If you have it, you can ask Claude to search for previous messages related to your workspace or project.

However, unless you explicitly ask, Claude does not look back. This means that Claude will maintain a sort of generic personality by default. That is for the sake of privacy, according to Anthropic. Claude can recall your discussions if you wish, without creeping into your conversations uninvited.

By comparison, OpenAI's ChatGPT automatically stores past chats unless you opt out, and uses them to shape its future responses. Google Gemini goes even further, using both your conversations with the AI and your search history and Google account data, at least if you let it. Claude's approach does not pick up breadcrumbs from earlier discussions without you asking.

Claude remembers

Adding memory may not seem like a big deal. However, you will feel the impact immediately if you have ever tried to restart a project interrupted for days or weeks without a helpful assistant, digital or otherwise. Making it an opt-in choice is a nice touch that reflects how comfortable people are with AI today.

Many may want help from AI without surrendering control to chatbots that never forget. Claude neatly sidesteps this tension by making memory something that you deliberately invoke.

But it is not magic. Because Claude does not keep a personalized profile, it will not proactively remind you to prepare for events mentioned in other chats, or anticipate the shift in style between writing to a colleague and writing a public business presentation, unless you prompt it to mid-conversation.

In addition, if there are problems with this approach to memory, Anthropic's rollout strategy will allow the company to correct errors before the feature becomes widely available to all Claude users. It will also be worth seeing whether building long-term context, as ChatGPT and Gemini do, proves more appealing or more off-putting to users than Claude's way of making memory an on-demand part of using the AI chatbot.

And that assumes it works perfectly. Retrieval depends on Claude's ability to surface the right excerpts, not just the most recent or longest chat. If the summaries are vague or the context is wrong, you could end up more confused than before. And although the friction of having to ask Claude to use its memory is supposed to be an advantage, it still means you will have to remember that the feature exists, which some may find annoying. Even so, if Anthropic is right, a small boundary is a good thing, not a limitation. And users will be happy that Claude remembers that, and nothing else, without being asked.