Comino made headlines with the launch of Grando, its water-cooled, AMD-based workstation packing eight Nvidia RTX 5090 GPUs. During a lengthy email exchange with its CTO/co-founder and its commercial director, I discovered that Grando is far more versatile than I expected.

Dig into its configurator and you'll notice that you can configure the system with up to eight AMD Radeon RX 7900 XTX GPUs because, well, why not?

“Yes, we can pack 8x 7900 XTX, though with a longer lead time. In fact, we can pack 8 GPUs + Epyc in a single system,” Alexey Chistov, Comino's CTO, told me when I pressed further.

Indeed, although Comino does not currently offer Intel's promising Arc GPUs, it will do so if the market calls for such solutions.

“We can design a water block for any GPU; it takes about a month,” said Chistov, “but we don't go for every possible GPU. We choose specific models and brands. We only go for high-end GPUs, to justify the extra cost of liquid cooling, because if a card can run properly with air cooling, why bother? We try to stick to one or two models per generation so we don't end up with multiple SKUs (stock keeping units) of water blocks. You can have an RTX 4090, H200, L40S or any other GPU for which we have a water block in a single system if your workflow benefits from such a combination.”

The Rimac of HPC

So how does Comino achieve such flexibility? The company bills itself as an engineering firm, with a slogan that proudly reads “designed, not just assembled”. Think of Comino as the Rimac of HPC: obscure, powerful, agile and expensive. Like Rimac, it focuses on the very top of its market and on absolute performance.

Its flagship product, Grando, is liquid-cooled and was designed from the start to accommodate up to eight GPUs, which means it will most likely stand the test of time across several Nvidia generations; more on that in a bit.

One of their main goals, Chistov told me, “is always to make each GPU fit in a single PCIe slot; this is how we can fill all the PCIe slots on the motherboard and install eight GPUs in a large server. The chassis is also designed by the Comino team, so everything works as one.” This is how a triple-slot GPU like the RTX 5090 can be converted into a single-slot card.

In that spirit, the company is preparing a “solution capable of running at a coolant temperature of 50°C without throttling, so if you lower the coolant temperature to 20°C and set the coolant flow to 3-4 l/min, the water block can remove approximately 1800 W of heat from the 5090 chip, with the chip temperature around 80-90°C.”

That's right: a single Comino GPU water block could remove 1800 W of heat from a single “hypothetical 5090” generating that much heat, provided the coolant temperature at the inlet is approximately 20°C and the coolant flow is no less than 3 to 4 liters per minute.
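As a rough sanity check on those figures, the steady-state energy balance Q = ṁ · c_p · ΔT gives the coolant temperature rise across one block. The sketch below assumes plain water near 20°C (density about 0.998 kg/L, specific heat about 4186 J/(kg·K)); Comino's actual coolant and block behaviour may differ, and the helper name is purely illustrative.

```python
# Sketch: steady-state energy balance for one water block, Q = m_dot * c_p * dT.
# Assumption: coolant behaves like pure water at ~20 °C (not Comino's spec).
WATER_DENSITY_KG_PER_L = 0.998
WATER_CP_J_PER_KG_K = 4186.0


def coolant_temp_rise(heat_w: float, flow_l_per_min: float) -> float:
    """Return the coolant temperature rise (K) for a given heat load and flow."""
    mass_flow_kg_s = flow_l_per_min * WATER_DENSITY_KG_PER_L / 60.0
    return heat_w / (mass_flow_kg_s * WATER_CP_J_PER_KG_K)


INLET_C = 20.0  # inlet temperature quoted in the article
for flow in (3.0, 4.0):
    rise = coolant_temp_rise(1800.0, flow)
    print(f"{flow:.0f} L/min: +{rise:.1f} K, outlet ~{INLET_C + rise:.1f} °C")
```

Under these assumptions, 1800 W warms the coolant by only about 6.5 to 8.6 K at 3-4 L/min, so the outlet stays in the high 20s °C. That is why a modest flow rate can carry so much heat.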

Put eight of these “hypothetical GPUs” together with some other components and you could end up with a total system power of 15 kW. If such a fully loaded system had a constant coolant temperature of 20°C and a water block coolant flow of no less than 3-4 liters per minute, it would indeed run “normally”.
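The 15 kW figure can be broken down with simple arithmetic: eight blocks at 1800 W account for most of it, leaving a few hundred watts for the CPU and the rest of the system. The sketch also assumes, purely for illustration, that each block sits on its own parallel branch receiving the full 3-4 L/min. The article does not describe the loop topology.

```python
# Sketch: power budget for a fully loaded 8-GPU system, using the article's figures.
GPU_COUNT = 8
HEAT_PER_GPU_W = 1800.0   # per-block heat removal quoted by Comino
SYSTEM_TOTAL_W = 15_000.0  # total system power cited in the article

gpu_heat_w = GPU_COUNT * HEAT_PER_GPU_W
other_w = SYSTEM_TOTAL_W - gpu_heat_w  # headroom left for CPU, board, drives, pumps

# Assumption (hypothetical): blocks are plumbed in parallel, each fed 3-4 L/min.
total_flow_low = GPU_COUNT * 3.0
total_flow_high = GPU_COUNT * 4.0

print(f"GPU heat: {gpu_heat_w / 1000:.1f} kW, rest of system: {other_w:.0f} W")
print(f"Loop flow if blocks run in parallel: {total_flow_low:.0f}-{total_flow_high:.0f} L/min")
```

If the blocks were instead plumbed in series, the total flow would be lower but each downstream block would see warmer coolant. That is one reason the inlet temperature matters as much as the flow rate.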

Who needs this kind of performance?

So what type of user splurges on multi-GPU systems? Chistov again: “There is no advantage in adding an extra 5090 if you are a gamer; it will not affect performance, because games cannot use multiple GPUs the way they once could with SLI, or even DirectX at one point. There are several applications we focus on for multi-GPU systems:

- AI inference: This is the most requested workload. In this scenario, each GPU works “alone”, and the reason to pack more GPUs per node is to reduce the “cost per GPU” when scaling: saving rack space, spending less money on non-GPU hardware, and so on. Each GPU in the system is used to process AI requests, mainly generative AI, for example Stable Diffusion, Midjourney or DALL-E.

- GPU rendering: a popular workload, but it does not always scale well as you add more GPUs. For example, Octane and V-Ray scale fairly well (~15% less performance per GPU at 8 GPUs), but Redshift does not (~35-40% less performance per GPU at 8 GPUs).

- Life sciences: various types of scientific computing, for example CryoSPARC or RELION.

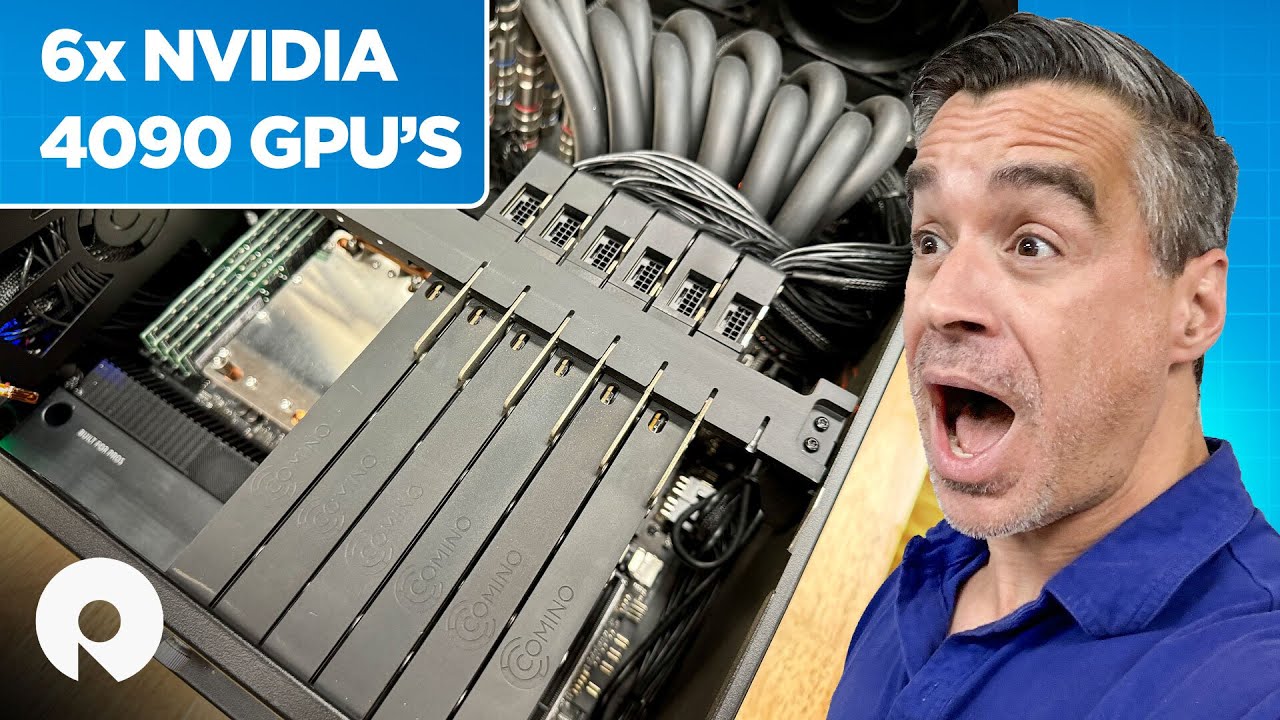

- Any GPU-bound workload in a virtualized environment. Using Hyper-V or other software, you can create several virtual machines to perform any task, for example a remote workstation. That's what StorageReview did with the Grando and the six RTX 4090 GPUs it had for review.
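To put the rendering scaling figures in perspective, the per-GPU losses can be converted into “single-GPU equivalents” for an 8-GPU box. This sketch assumes the quoted loss applies uniformly to every GPU, which is a simplification. The percentages are the approximate ones Comino gave, not benchmark data.

```python
# Sketch: effective throughput of an 8-GPU render node, given a per-GPU loss.
def effective_gpus(n_gpus: int, per_gpu_loss: float) -> float:
    """Throughput in single-GPU equivalents if each GPU loses `per_gpu_loss` (0-1)."""
    return n_gpus * (1.0 - per_gpu_loss)


# Approximate per-GPU losses at 8 GPUs, as quoted by Comino.
scenarios = {
    "~15% loss (scales well)": 0.15,
    "~35% loss (scales poorly)": 0.35,
    "~40% loss (scales poorly)": 0.40,
}
for label, loss in scenarios.items():
    print(f"{label}: ~{effective_gpus(8, loss):.1f} GPU-equivalents")
```

Under that assumption, a renderer that scales well still delivers roughly 6.8 GPUs' worth of work from eight cards, while one that loses 35-40% per GPU delivers only about 4.8 to 5.2.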

More specifically for the RTX 5090, the most important improvement for AI workloads is the 33% increase in memory capacity (up to 32 GB), which makes Nvidia's new flagship better suited to inference because you can fit a much larger AI model in memory. Then there is the much higher memory bandwidth, which also helps.
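A back-of-the-envelope way to see why the extra memory matters is to estimate the size of a model's weights: parameter count times bytes per parameter. The sketch below ignores KV cache and activations except for a hypothetical 10% reserve. It uses the RTX 4090's 24 GB and the RTX 5090's 32 GB, and the 13-billion-parameter model is an arbitrary illustrative size.

```python
# Sketch: does a model's weights fit in VRAM? (weights only, rough decimal GB)
VRAM_GB = {"RTX 4090": 24, "RTX 5090": 32}


def weights_gb(params_billion: float, bytes_per_param: float) -> float:
    """Approximate weight size in GB (1e9 params * bytes / 1e9 bytes per GB)."""
    return params_billion * bytes_per_param


def fits(params_billion: float, bytes_per_param: float, vram_gb: float,
         reserve_fraction: float = 0.1) -> bool:
    """True if weights fit after reserving a (hypothetical) share for runtime overhead."""
    usable = vram_gb * (1.0 - reserve_fraction)
    return weights_gb(params_billion, bytes_per_param) <= usable


# Hypothetical 13B-parameter model stored in FP16 (2 bytes per parameter).
for card, vram in VRAM_GB.items():
    print(f"{card}: 13B @ FP16 fits = {fits(13, 2, vram)}")
```

With these assumptions, a 13B FP16 model (about 26 GB of weights) overflows a 24 GB card but fits on a 32 GB one. That is the kind of threshold the capacity bump moves.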

Worth watching

In his RTX 5090 review, TechRadar's John Loeffler calls it the supercar of graphics cards and asks whether it is simply too powerful, suggesting that it is an absolute power hog.

“It's overkill,” he quips, “especially if you only want it for gaming, since monitors that can really handle the frames this GPU can pump out are probably years away.”