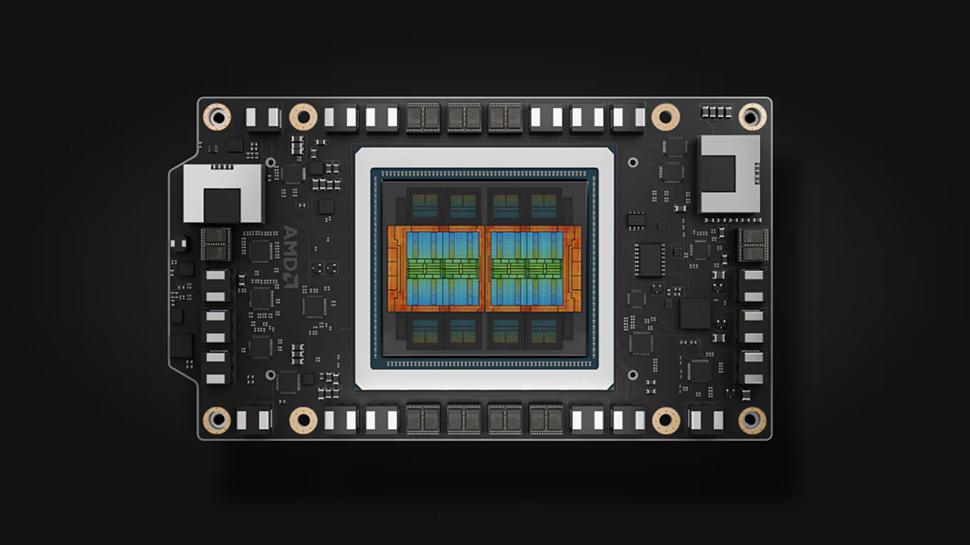

- AMD showcased the MI350 series at Hot Chips 2025, tracing its evolution from node to rack

- The MI355X DLC rack includes 128 GPUs, 36 TB of HBM3E and 2.6 exaflops of compute

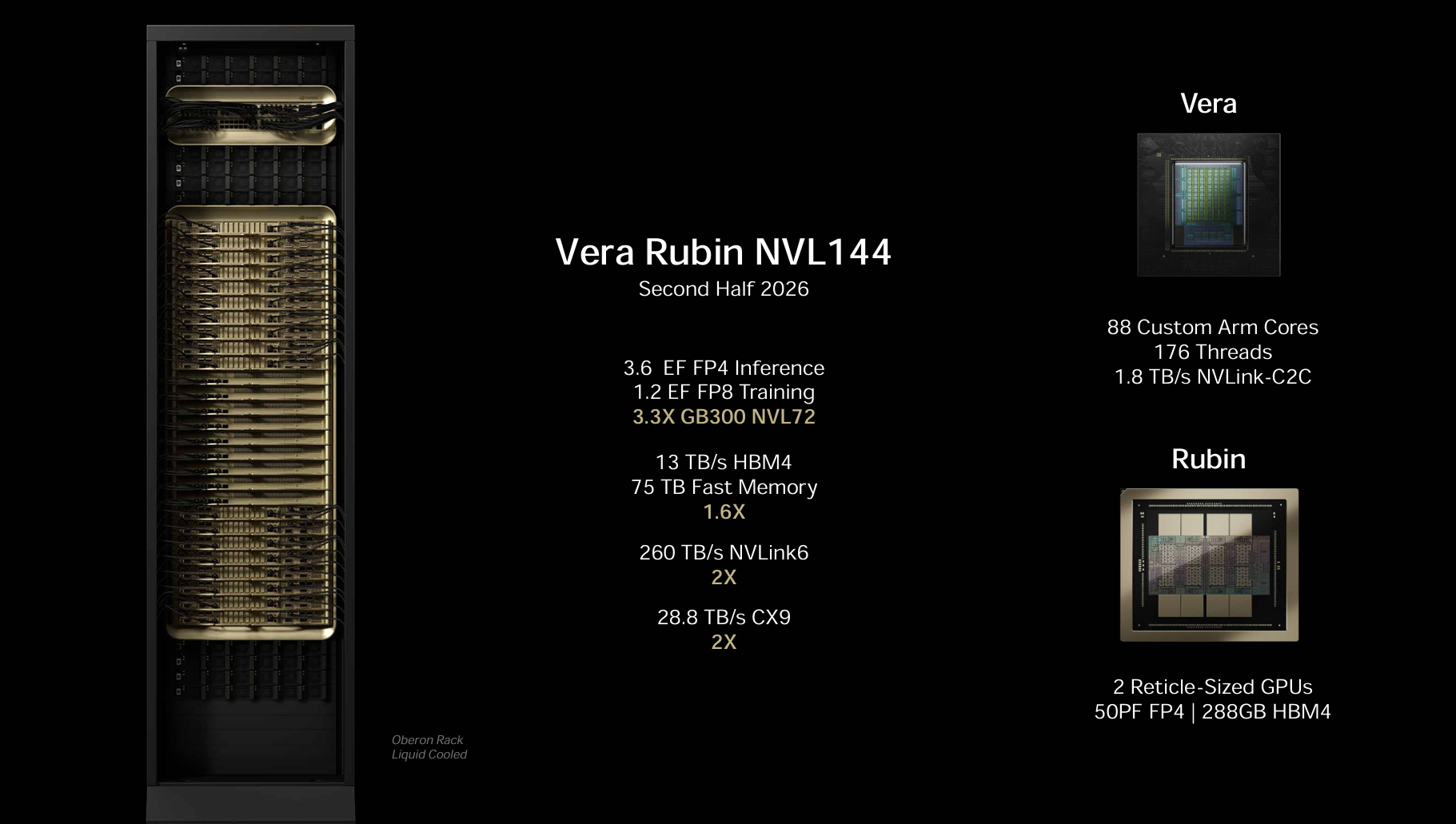

- Nvidia's Vera Rubin system, due next year, is a beast built for maximum scale

AMD used the recent Hot Chips 2025 event to talk more about the CDNA 4 architecture that powers its new Instinct MI350 series, and to show how its accelerators scale from node to rack.

MI350 series platforms combine 5th Gen EPYC CPUs, MI350 GPUs and AMD Pollara NICs in OCP-standard designs with UEC-supported networking. Bandwidth is delivered over Infinity Fabric at up to 1,075 GB/s.

At the top end sits the MI355X DLC 'ORv3' rack, a 2OU system with 128 GPUs, 36 TB of HBM3E memory and peak throughput of 2.6 exaflops at FP4 precision (there is also a 96-GPU EIA version with 27 TB of HBM3E).
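To put the rack totals above in per-GPU terms, here is a minimal sketch that divides the quoted figures evenly across the GPUs. The function names are illustrative, and the conversion of 1 TB to 1,024 GB is an assumption about how the rack capacity was rounded, not something AMD states in this article.

```python
# Rack-level figures as quoted in the article (vendor-stated peaks).
RACKS = {
    "MI355X DLC ORv3": {"gpus": 128, "hbm_tb": 36, "fp4_exaflops": 2.6},
    "MI355X EIA": {"gpus": 96, "hbm_tb": 27, "fp4_exaflops": None},  # FP4 not quoted
}

def hbm_per_gpu_gb(hbm_tb, gpus, gb_per_tb=1024):
    """Split rack HBM evenly across GPUs. gb_per_tb=1024 is an assumption."""
    return hbm_tb * gb_per_tb / gpus

def fp4_per_gpu_pflops(rack_exaflops, gpus):
    """Convert rack exaflops to petaflops and split evenly across GPUs."""
    return rack_exaflops * 1000 / gpus

for name, rack in RACKS.items():
    line = f"{name}: {hbm_per_gpu_gb(rack['hbm_tb'], rack['gpus']):.0f} GB HBM3E per GPU"
    if rack["fp4_exaflops"] is not None:
        pf = fp4_per_gpu_pflops(rack["fp4_exaflops"], rack["gpus"])
        line += f", {pf:.1f} PFLOPS FP4 per GPU"
    print(line)
```

Under that assumption, both rack variants work out to the same HBM3E capacity per GPU, which suggests the 96-GPU version uses the same accelerator with fewer units per rack.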

Here comes Vera Rubin

At the node level, AMD presented flexible designs for both air-cooled and liquid-cooled systems.

An MI350X platform with 8 GPUs reaches 73.8 petaflops at FP8, while the liquid-cooled MI355X platform reaches 80.5 petaflops at FP8 in a denser form factor.
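As a quick illustration of what those platform totals imply, the sketch below derives per-GPU FP8 throughput and the liquid-cooled uplift. It assumes the platform figure is split evenly across 8 GPUs in both cases; the variable names are illustrative.

```python
# 8-GPU platform FP8 peaks as quoted in the article, in petaflops.
GPUS_PER_PLATFORM = 8  # assumed to apply to both platforms
PLATFORM_FP8_PFLOPS = {
    "MI350X (air-cooled)": 73.8,
    "MI355X (liquid-cooled)": 80.5,
}

# Even split of the platform total across its GPUs.
per_gpu = {
    name: total / GPUS_PER_PLATFORM
    for name, total in PLATFORM_FP8_PFLOPS.items()
}

# Relative uplift of the liquid-cooled platform over the air-cooled one.
uplift = (
    PLATFORM_FP8_PFLOPS["MI355X (liquid-cooled)"]
    / PLATFORM_FP8_PFLOPS["MI350X (air-cooled)"]
    - 1
)

for name, pf in per_gpu.items():
    print(f"{name}: {pf:.2f} PFLOPS FP8 per GPU")
print(f"Liquid-cooled uplift: {uplift:.1%}")
```

On these numbers the liquid-cooled platform delivers roughly 9% more peak FP8 throughput than the air-cooled one.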

AMD also confirmed its roadmap. The chip giant launched the MI325X in 2024, the MI350 family arrived earlier in 2025, and the Instinct MI400 is due in 2026.

The MI400 will offer up to 40 petaflops of FP4, 20 petaflops of FP8, 432 GB of HBM4 memory, 19.6 TB/s of memory bandwidth and 300 GB/s of scale-out bandwidth per GPU.

AMD says the performance curve from MI300 to MI400 shows accelerating gains rather than incremental steps.

The elephant in the room is, of course, Nvidia, which has its Rubin architecture planned for 2026-2027. The Vera Rubin NVL144 system, penciled in for the second half of next year (according to slides shared by Nvidia), will be rated for 3.6 exaflops of FP4 inference and 1.2 exaflops of FP8 training. It has 13 TB/s of HBM4 bandwidth and 75 TB of fast memory, offering a 1.6x gain over its predecessor.

Nvidia is incorporating 88 custom Arm CPU cores with 176 threads, connected by 1.8 TB/s of NVLink-C2C, alongside 260 TB/s of NVLink6 and 28.8 TB/s of CX9 interconnect.

For late 2027, Nvidia has the Rubin Ultra NVL576 system planned. This will be rated for 15 exaflops of FP4 inference and 5 exaflops of FP8 training, with 4.6 PB/s of HBM4E bandwidth, 365 TB of fast memory, and interconnect speeds of 1.5 PB/s using NVLink7 and 115 TB/s using CX9.
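For a rough sense of the generational jump, the sketch below computes the ratio between the quoted Vera Rubin NVL144 and Rubin Ultra NVL576 figures. It covers only metrics quoted for both systems in matching units. HBM bandwidth is left out because the two quoted values differ by more than two orders of magnitude, which may mean they are not stated on the same basis; that reading is an assumption.

```python
# Roadmap figures as quoted in the article: (NVL144, Rubin Ultra NVL576).
SPECS = {
    "FP4 inference (exaflops)": (3.6, 15.0),
    "FP8 training (exaflops)": (1.2, 5.0),
    "Fast memory (TB)": (75.0, 365.0),
    "NVLink (TB/s)": (260.0, 1500.0),  # 1.5 PB/s expressed as 1,500 TB/s
    "CX9 (TB/s)": (28.8, 115.0),
}

# Ratio of the later system to the earlier one for each metric.
ratios = {metric: ultra / base for metric, (base, ultra) in SPECS.items()}

for metric, ratio in ratios.items():
    print(f"{metric}: {ratio:.2f}x")
```

Compute and CX9 bandwidth scale by about 4x, in line with the fourfold increase in GPU count implied by the system names, while fast memory and NVLink bandwidth grow somewhat faster.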

At full scale, the Rubin Ultra system will include 576 GPUs, 2,304 HBM4E stacks totaling 150 TB of memory, and 1,300 trillion transistors, supported by 12,672 Vera CPU cores, 576 CX9 NICs, 72 BlueField DPUs and 144 NVLink switches rated at 1,500 PB/s.

It is a tightly integrated, monolithic beast aimed at maximum scale.

Although it is fun to compare the AMD and Nvidia numbers, it is obviously not an entirely fair comparison. AMD's MI355X DLC rack is a detailed 2025 product, while Nvidia's Rubin systems remain roadmap designs for 2026 and 2027. Even so, it is an interesting look at how each company is framing the next wave of AI infrastructure.