- Low-latency networking becomes vital for faster, more efficient AI inference

- AMD’s Solarflare X4 Adapters Extend Proven Commercial Technology to Real-Time AI Environments

- Consistent sub-microsecond performance could improve reliability of cutting-edge data-driven applications

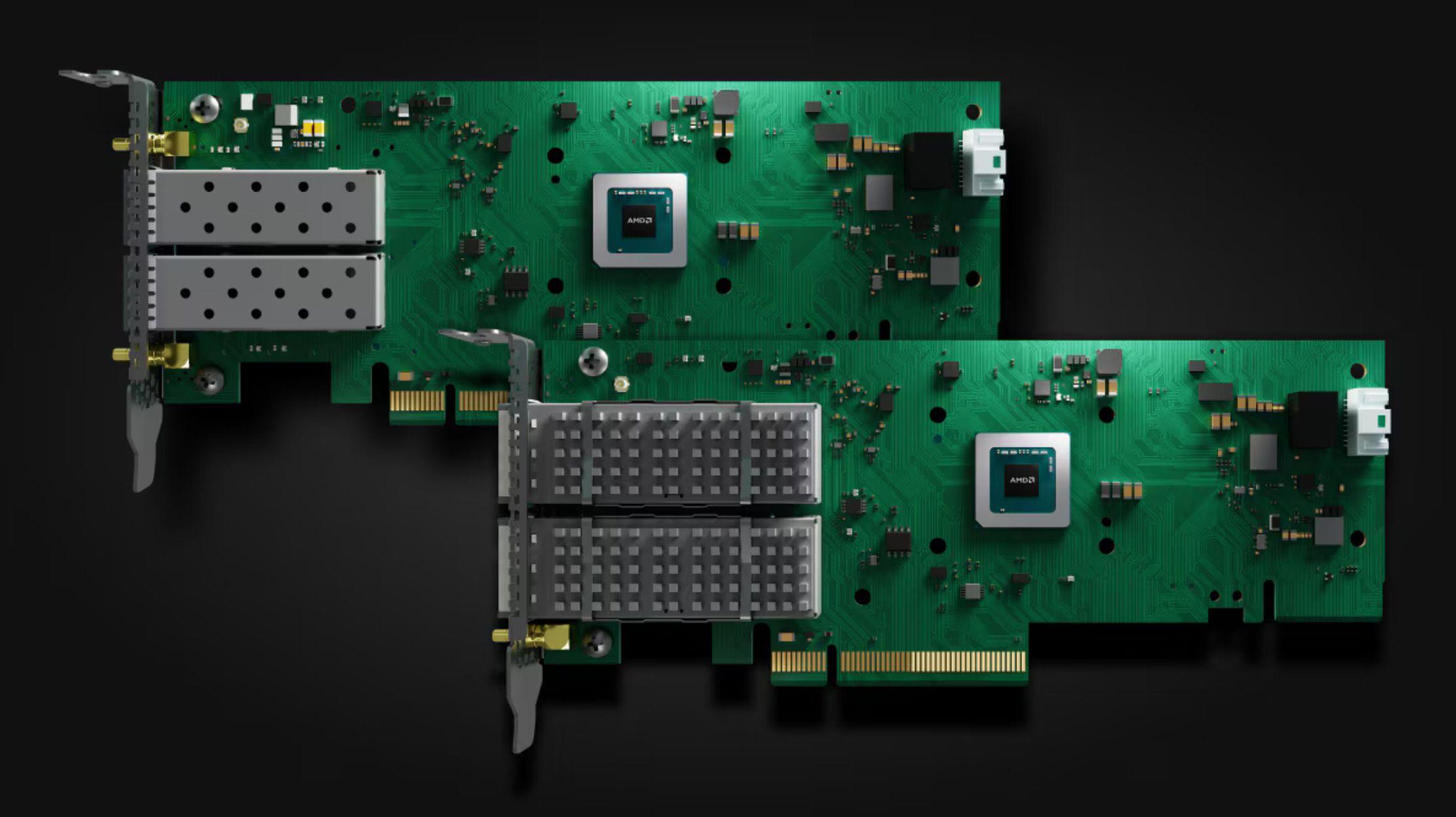

AMD has unveiled the Solarflare X4 Ethernet adapters, the next generation of its ultra-low-latency network interface cards.

Although these adapters were designed for high-frequency trading, their capabilities mean they could also play a role in AI inference workloads that demand rapid data movement and predictable response times.

Low latency is becoming increasingly critical for AI inference, particularly in real-time applications where any delay can limit performance or accuracy.

Cut-through programmed I/O

AI inference depends on moving data quickly between machines, so network speed plays a major role in how quickly results arrive.

The Solarflare X4 series builds on technology that has long served the financial industry. It includes two main models: the X4522, which supports two SFP56 ports up to 50 GbE each, and the X4542, which uses two QSFP56 ports up to 100 GbE.

Both support a mode called Cut-Through Programmed I/O (CTPIO), which starts transmitting a packet onto the network before the whole packet has crossed the PCIe bus, reducing transmit latency.

While AMD’s adapters don’t match the raw bandwidth of 400G or 800G networking hardware, they maintain sub-microsecond latency with a high degree of consistency.
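"Consistent" latency is usually judged by the tail of the distribution (for example the 99th percentile) rather than the average alone. The short Python sketch below illustrates that idea. The sample values are hypothetical, invented purely for illustration and not measured on any Solarflare hardware. The nearest-rank percentile and the p99 minus p50 "spread" are simple, common choices, not AMD's benchmarking methodology.

```python
import math
import statistics


def percentile(samples_ns: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample covering pct percent of the data."""
    if not samples_ns:
        raise ValueError("need at least one sample")
    ordered = sorted(samples_ns)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_summary(samples_ns: list[float]) -> dict[str, float]:
    """Summarize a latency distribution; p99 - p50 is used here as a simple jitter measure."""
    p50 = percentile(samples_ns, 50)
    p99 = percentile(samples_ns, 99)
    return {
        "mean": statistics.fmean(samples_ns),
        "p50": p50,
        "p99": p99,
        "max": max(samples_ns),
        "spread": p99 - p50,
    }


# Hypothetical one-way latencies in nanoseconds (illustrative only, not real measurements).
steady = [800.0] * 98 + [850.0, 900.0]
spiky = [700.0] * 98 + [5000.0, 12000.0]

for name, samples in (("steady", steady), ("spiky", spiky)):
    s = latency_summary(samples)
    print(f"{name}: mean={s['mean']:.1f} ns p50={s['p50']:.0f} ns "
          f"p99={s['p99']:.0f} ns max={s['max']:.0f} ns")
```

With these made-up numbers, the "spiky" link has the lower median (700 ns versus 800 ns) but a 99th percentile of 5,000 ns versus 850 ns. An application that needs predictable response times would generally prefer the "steady" profile.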

As well as being an attractive choice for financial systems, they could also suit emerging AI workloads that require real-time inference at the edge.

The adapters work with AMD’s Onload software, which bypasses the operating system kernel for network processing. By reducing the CPU overhead of moving data, it can free up processing power for inference tasks, calculations, analysis and control operations.

Reduced latency can translate into faster and more reliable responses for AI applications running in autonomous systems, smart manufacturing, or content delivery environments.

The Solarflare X4 series may be designed for specialized markets, but networking hardware optimized for speed and predictability could benefit a range of data-driven industries.

AMD’s move could prove to be one of its most strategic recent releases, bridging decades of expertise in low-latency networking with the growing demand for super-efficient AI inference.
