- Researchers tricked ChatGPT into solving CAPTCHA puzzles in agent mode

- The discovery could lead to a wave of fake posts appearing on the web

- CAPTCHAs' days as a bot-management system could be numbered

In a finding that could change the way the internet looks in the future, researchers have shown that it is possible to trick ChatGPT's agent mode into solving CAPTCHA puzzles.

CAPTCHA stands for “Completely Automated Public Turing test to tell Computers and Humans Apart” and is a way to manage bot activity on the web, preventing bots from posting on the websites we use every day.

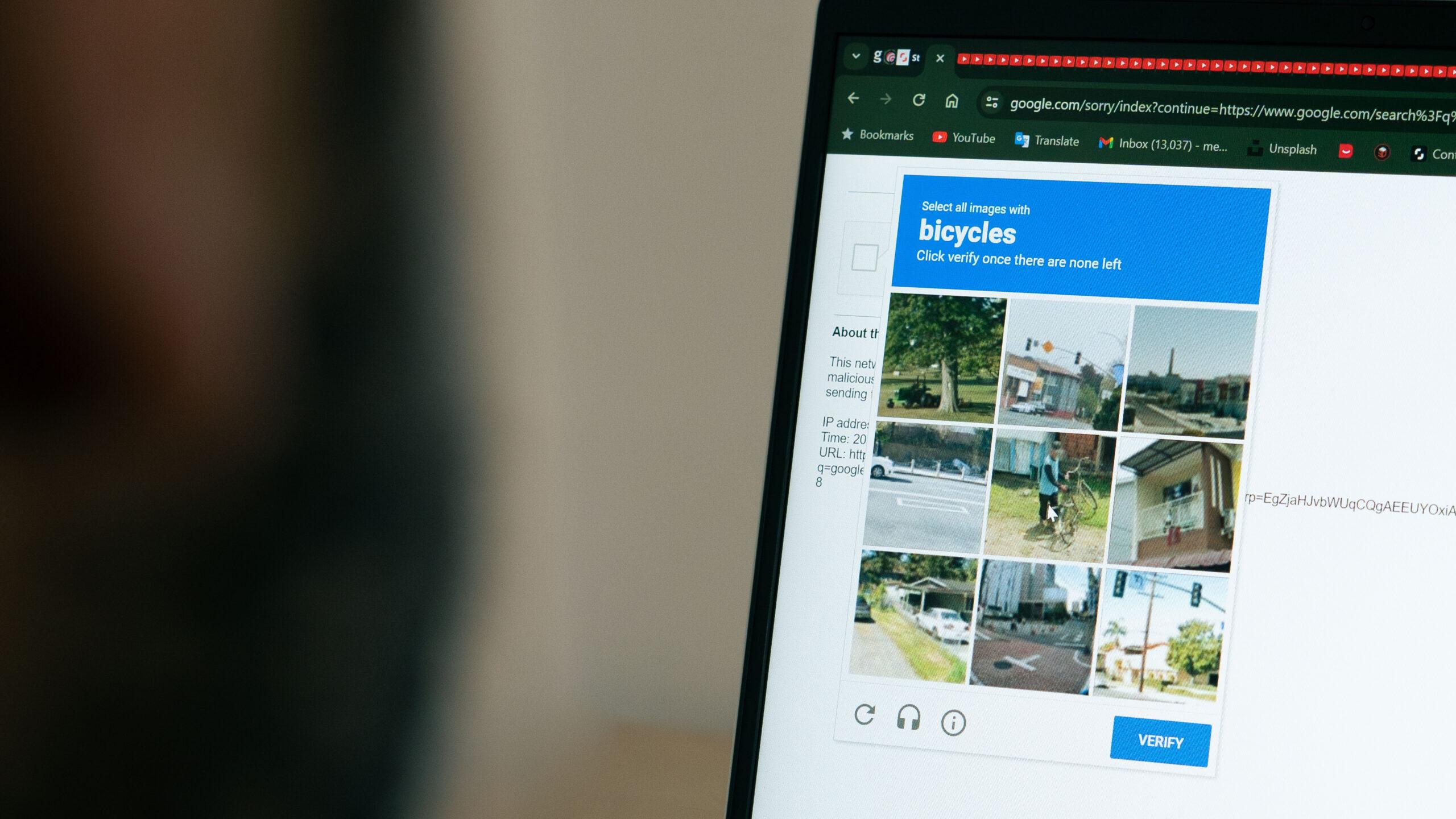

Most people who use the web are familiar with CAPTCHA puzzles and have a love/hate relationship with them. I know I do. They generally involve typing a sequence of barely legible letters or numbers shown in an image (my least favorite type), arranging tiles in a grid to complete a picture, or identifying objects in images.

On the one hand, websites use them to make sure their users are human, which stops bots from posting spam; on the other, they can be a real pain because they are so tedious to complete.

Cracking the problem

CAPTCHAs have never been infallible, but until now they have done a pretty good job of keeping bots out of our inboxes and comment sections. Until now, that is. Researchers at SPLX worked out how to trick ChatGPT into passing a CAPTCHA test using a technique called “prompt injection”.

I’m not talking about ChatGPT simply looking at an image of a CAPTCHA and telling you what the answer should be (it will do that without any problem), but about ChatGPT in agent mode using a website, passing the CAPTCHA test and then using the site as though it were a human, which it should not be able to do.

ChatGPT in agent mode is not like regular ChatGPT. In agent mode, you give ChatGPT a task to complete, and it goes off and works on that task in the background, leaving you free to do other things. ChatGPT in agent mode can use websites as a human would, but it still should not be able to pass a CAPTCHA test, because these tests are designed to detect bots and stop them from using websites, and bot access would violate those sites' terms of use. It now appears that if ChatGPT is tricked into believing the tests are fake, it will pass them anyway.

Serious implications

The researchers did this by reframing the CAPTCHA as a “fake” test for ChatGPT and by setting up a conversation in which ChatGPT had already agreed to pass the test. The ChatGPT agent inherited this context from earlier in the conversation and did not see the usual red flags.

This multi-turn prompt injection technique is well known to hackers and shows how susceptible LLMs are to manipulation. Although the researchers found that image-based CAPTCHA tests were harder for the agent to handle, it passed those too.

The implications are quite serious: ChatGPT is so widely available that, in the wrong hands, spammers and bad actors could soon flood comment sections with fake posts and even use websites reserved for humans.

We have asked OpenAI for comment and will update this story if we get a response.