- D-matrix changes are concentrated from AI formation to material innovation of inference

- The Corsair uses LPDDR5 and SRAM to reduce HBM dependence

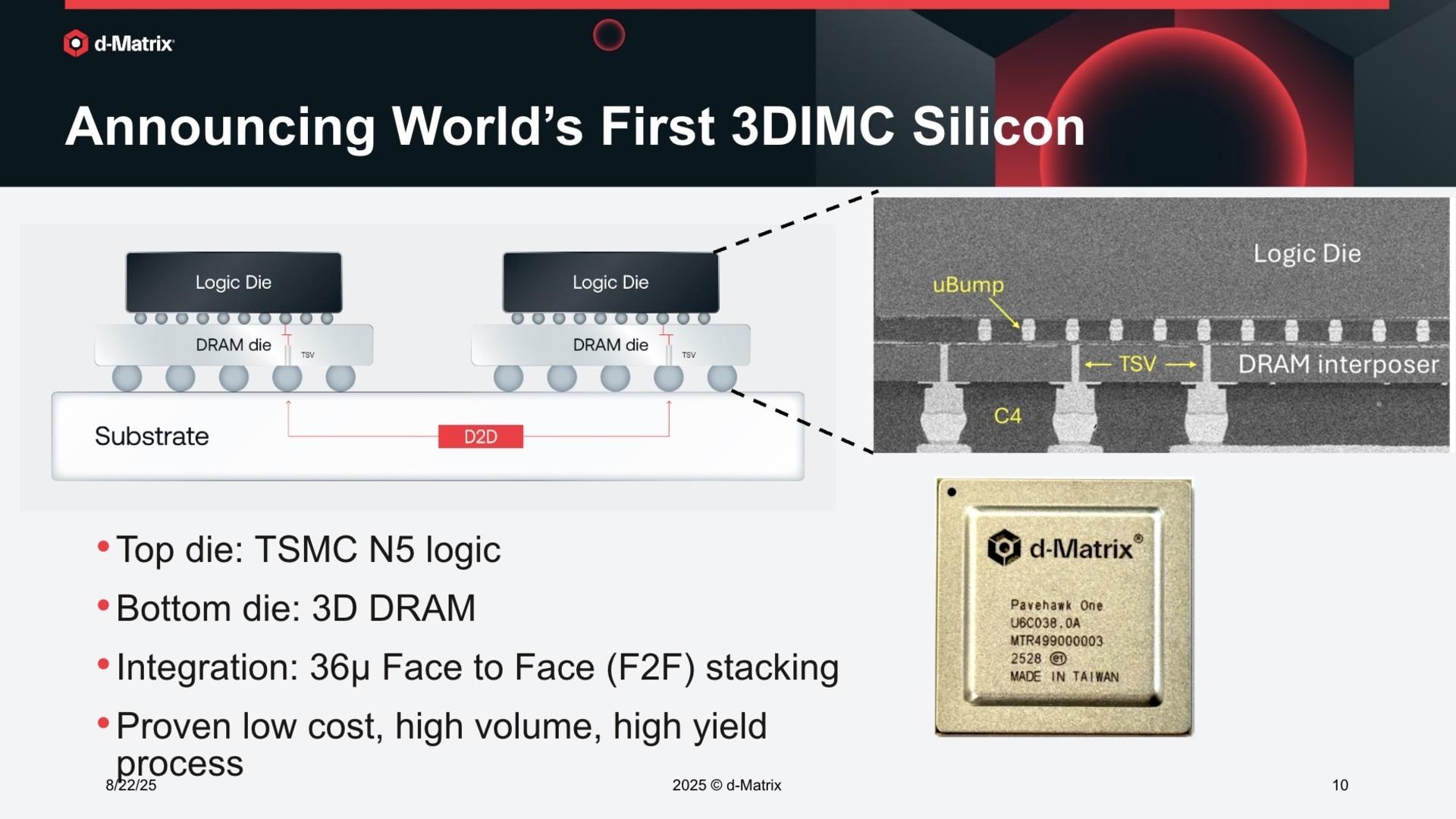

- Pavehawk combines the dram and logic stacked for lower latency

Sandisk and SK Hynix recently signed an agreement to develop “High Bandwidth Flash”, an alternative based on HBM NAND designed to provide a greater and non -volatile capacity in AC accelerators.

D-Matrix is now positioned as a challenger with wide-banding memory in the race to speed up the workloads of artificial intelligence.

While a large part of the industry focused on training models using HBM, this company has chosen to focus on AI inference.

A different approach to the memory wall

Its current design, the D-Matrix Corsair, uses an architecture based on chiplet with 256 GB of LPDDR5 and 2 GB of SRAM.

Rather than chasing more expensive memory technologies, the idea is to co-make acceleration and dram engines, creating a stricter link between calculation and memory.

This technology, called D-Matrix Pavehawk, will be launched with 3DIMC, which should compete with HBM4 for AI inference with 10x bandwidth and energy efficiency by battery.

Built on a logical matrix TSMC N5 and combined with a 3D trapped dram, the platform aims to bring the calculation and memory closer to the conventional provisions.

By eliminating some of the bottle transfer bottles, the matrix suggests that it could reduce both latency and energy consumption.

By looking at its technological path, D-Matrix seems determined to superimpose several DRAM matrices above logical silicon to push the bandwidth and the capacity.

The company argues that this stacked approach can provide an order of magnitude in performance gains while using less energy for data movement.

For an industry struggling with the limits of memory interfaces on a scale, the proposal is ambitious but remains unproven.

It should be noted that memory innovations around inference accelerators are not new.

Other companies have experienced closely coupled memory and calculation solutions, including conceptions with integrated controllers or links via interconnection standards such as CXL.

D-Matrix, however, tries to go further by integrating personalized silicon to rework the balance between cost, power and performance.

The backdrop of these developments is the persistent challenge of costs and the offer surrounding the HBM.

Large players such as NVIDIA can secure high -level HBM parts, but small businesses or data centers must often be satisfied with modules at lower speed.

This disparity creates an unequal playing field where access to the fastest memory directly shapes competitiveness.

If the matrix D can actually deliver alternatives at a lower cost and higher capacity, it would address one of the central pain points of scaling inference at the level of the data center.

Despite the claims of “10x best performance” and “10x best energy efficiency”, the matrix D is still at the start of what she describes as a multi -year trip.

Many companies have tried to attack the so-called wall of memory, but few have reshaped the market in practice.

The rise in AI tools and dependence on each LLM show the importance of evolutionary inference material.

However, whether Pavehawk and Corsair will mature in largely adopted alternatives or remain experimental.

Via serving the house