- The fastest manufacturer of the world has a splash with deep integration

- Cerebras says that the solution will classify 57x faster than on GPUs but does not mention which GPU

- Deepseek R1 will operate on Cerebras Cloud and the data will remain in the United States

Cerebras has announced that he would support Deepseek in a not so surprised decision, more specifically the R1 70B reasoning model. This decision comes after Groq and Microsoft confirmed that they would also bring the new child of the AI block in their respective clouds. AWS and Google Cloud have not yet done so, but anyone can run the open source model anywhere, even locally.

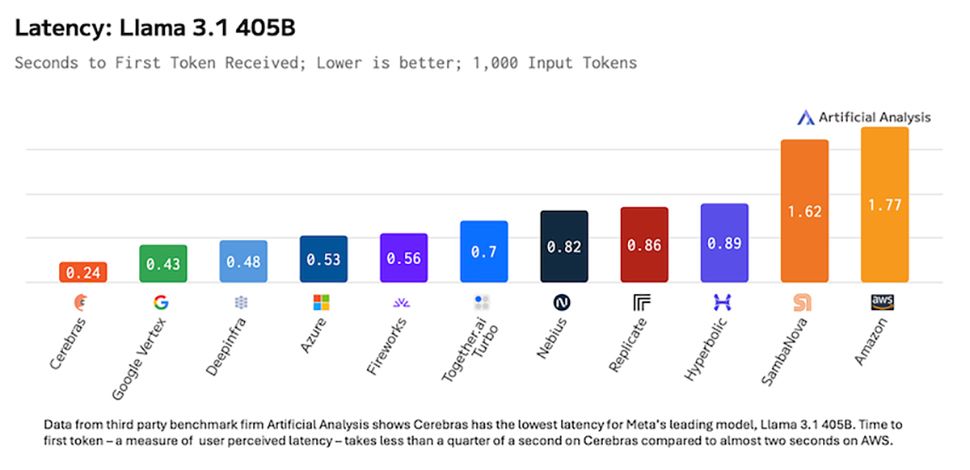

The specialist in an inference chips AI will execute Deepseek R1 70B to 1,600 tokens / second, which, according to him, is 57x faster than any R1 supplier using GPUs; We can deduce that 28 tokens / seconds are what the GPU-in-the-Cloud solution (in this case Deepinfra) apparently reaches. Originally, the last Cerebras chip is 57x larger than the H100. I contacted Cerebras to find out more about this assertion.

The search for Cerebras also demonstrated that Deepseek is more precise than the OpenAi models on a certain number of tests. The model will operate on material cerebras in data centers based on the United States to appease confidentiality concerns that many experts have expressed. DEEPSEEK – The application – will send your data (and metadata) to China where it will most likely be stored. Nothing surprising here because almost all applications – especially free – capture user data for legitimate reasons.

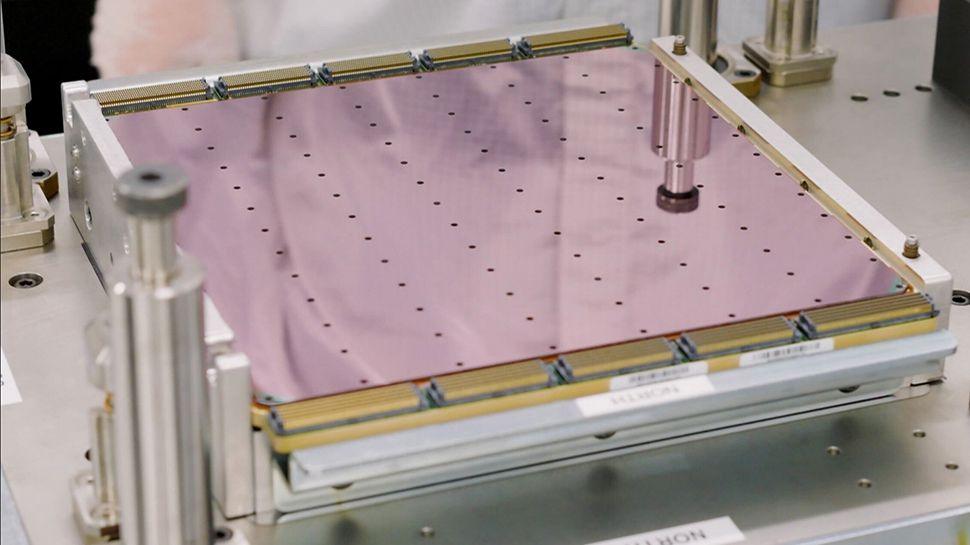

The solution on the scale of the Cerebras brochure positions it only to benefit from the boom of the inference of the immense AI clouds. WSE-3, which is the fastest (or HPC accelerator) chip in the world, has nearly a million hearts and narcotic transistors of four Billions. More importantly, it has 44 GB of SRAM, which is the fastest memory available, even faster than HBM found on the GPUs of Nvidia. Given that WSE-3 is only a huge mat, the available memory band is enormous, several orders of magnitude larger than what the Nvidia H100 (and besides the H200) can bring together.

A prize war is prepared before the launch of the WSE-4

No price has yet been disclosed, but the deceptions, which are generally shy about this particular detail, disclosed last year that Llama 3.1 405b on the inference of deceptions would cost $ 6 / million entry entrances And the $ 12 / million exit tokens. Expect that Deepseek is available for much less.

WSE-4 is the next iteration of WSE-3 and will provide a significant boost in the performance of Deepseek and similar reasoning models when it should be launched in 2026 or 2027 (depending on market conditions).

The arrival of Deepseek is also likely to shake the tree of Proverbial AI money, bring more competition to established players like Openai or Anthropic, lowering prices.

A quick overview of the Docsbot.Ai LLM API calculator shows that OpenAi is almost always the most expensive in all configurations, sometimes several orders of magnitude.