- Google adds gesture search to its Google Lens feature

- The update is coming to the Chrome and Google apps on iOS

- The company is also working on more AI capabilities for Lens

If you use the Chrome or Google apps on your iPhone, there is now a new way to quickly find information based on whatever is on your screen. If it works well, it could save you time and make your searches easier.

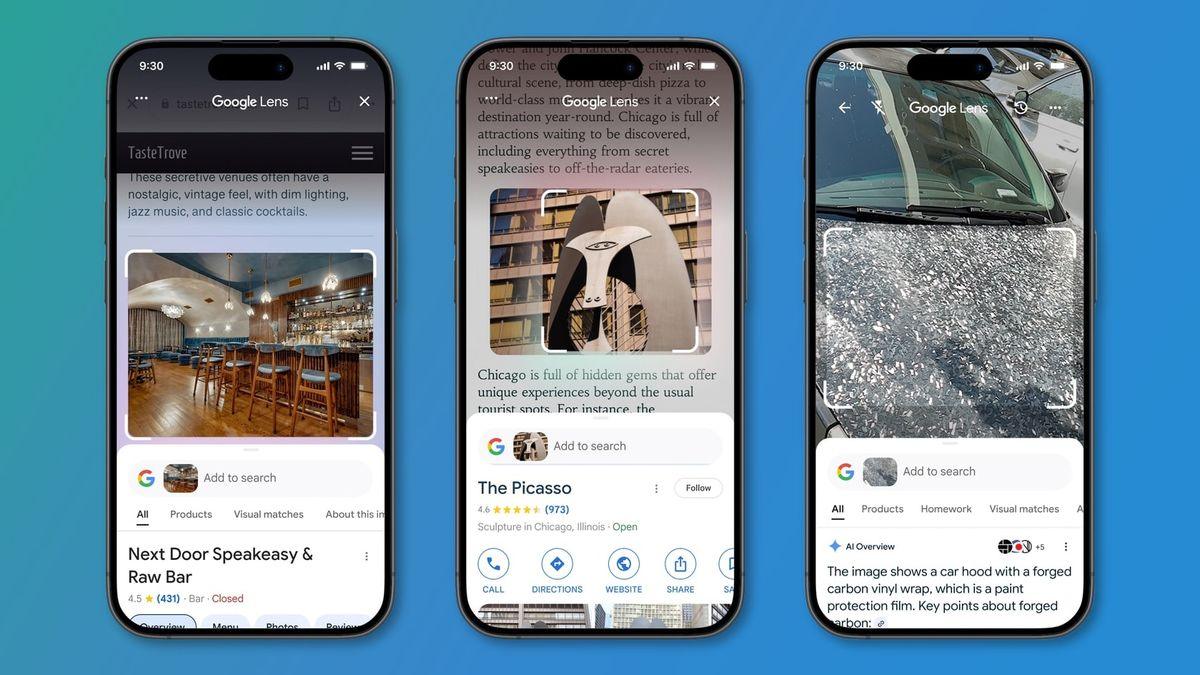

The update concerns Google Lens, which lets you search using images rather than words. Google says you can now use a gesture to select something on the screen, then search for it. You can draw around an object, for example, or tap it to select it. It works whether you are reading an article, shopping for a new product, or watching a video, as Google explains.

The best iPhones have had a similar feature for some time, but it has always been an unofficial workaround that required using the Action Button and the Shortcuts app. Now it is a built-in feature in some of the most popular iOS apps available.

The Chrome and Google apps on iOS already had Google Lens built in, but the previous implementation was clumsier than today’s update. Before, you had to save an image or take a screenshot, then upload it to Google Lens. That could mean switching between multiple apps and was much more of a hassle. Now a quick gesture is all you need.

How to use the new Google Lens on iPhone

When using the Chrome or Google apps, tap the three-dot menu button, then select Search Screen with Google Lens or Search this Screen, respectively. This places a colorful overlay on top of the web page you are currently viewing.

You will see a box at the bottom of your screen that reads: “Circle or tap anywhere to search.” You can now use a gesture to select an item on the screen. Google Lens will then automatically search for the selected object.

The new gesture feature is rolling out worldwide this week and will be available in the Chrome and Google apps on iOS. Google has also confirmed that it will add a new Lens icon to the app's address bar in the future, which will give you another way to use gestures in Google Lens.

Google added that it is also using artificial intelligence (AI) to add new capabilities to Lens. This will make it possible to look up more novel or unique subjects, and it will mean that Google's AI Overviews appear more frequently in your results.

This feature is also rolling out this week and will reach English-language users in countries where AI Overviews are available. For now, it will arrive first in the Google app for Android and iOS, with Chrome desktop and mobile availability coming later.