Google Search is under pressure. Not only are many of us replacing it with ChatGPT Search, but Google’s attempts to fend off the competition with features such as AI Overviews have also backfired due to some worrying inaccuracies.

That’s why Google has just given Search its biggest overhaul in over 25 years at Google I/O 2025. The era of the “ten blue links” is coming to a close, with Google now giving its AI Mode (previously tucked away in its Labs experiments) a wider rollout in the United States.

AI Mode was far from the only Search news at this year’s I/O, so if you’re wondering what the next 25 years of “Googling” look like, here are all the new Search features Google has just announced.

A word of warning: beyond AI Mode, many of these features will only be available to Labs testers in the United States, so if you want to be among the first to try them “in the coming weeks”, turn on the AI Mode experiment in Labs.

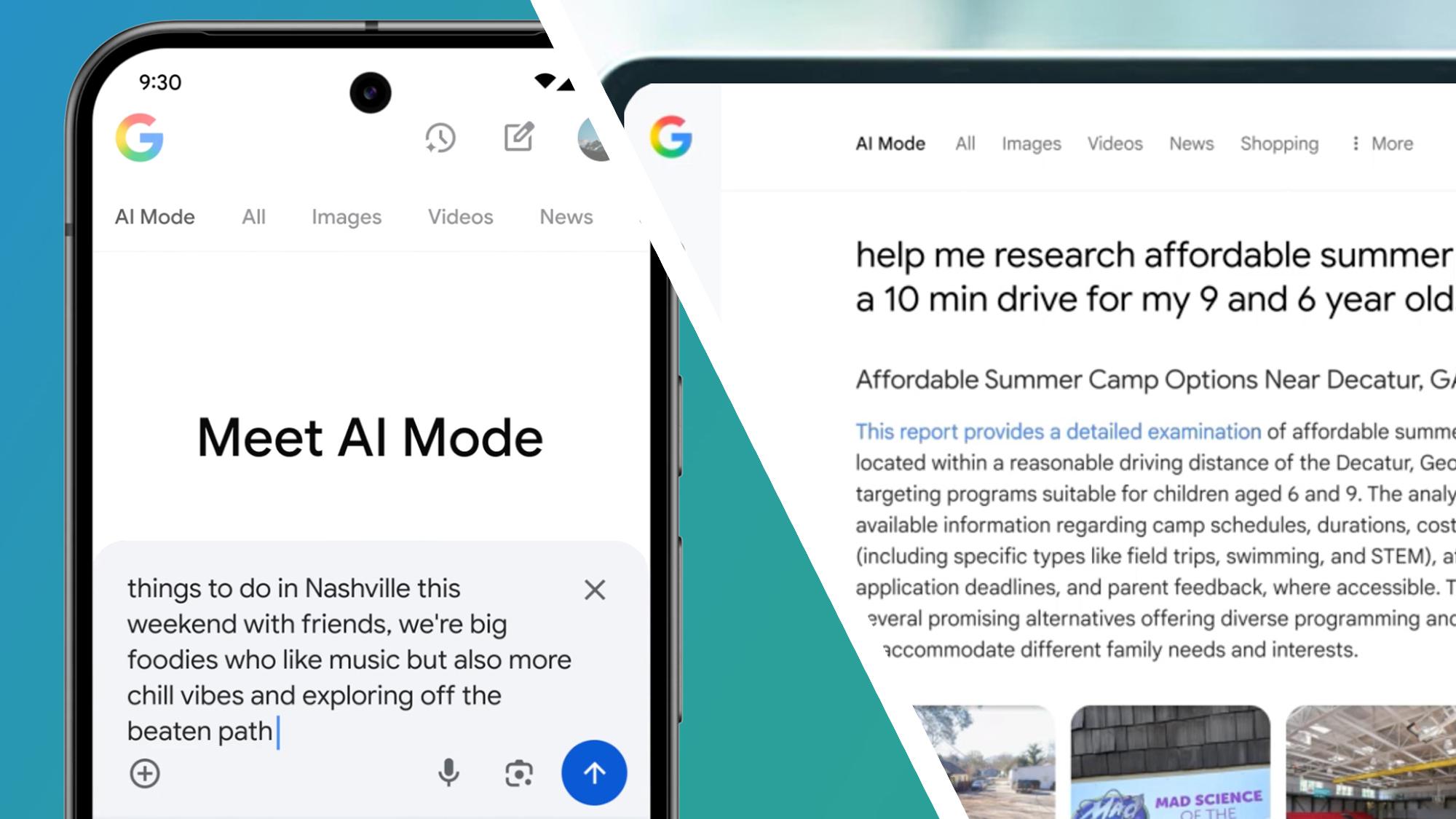

1. AI Mode in Search is rolling out to everyone in the United States

Yes, Google has just taken the stabilizers off AI Mode in Search, which was previously only available to early testers in Labs, and rolled it out to everyone in the United States. There’s no word yet on what will happen in other regions.

Google says that “in the coming weeks” (a worryingly vague timeframe), you’ll see AI Mode appear as a new tab in Google Search on the web (and in the search bar of the Google app).

We’ve already tried AI Mode and concluded that “it could be the end of Search as we know it”, and Google says it has refined it since then. The new version is apparently powered by a custom version of Gemini 2.5.

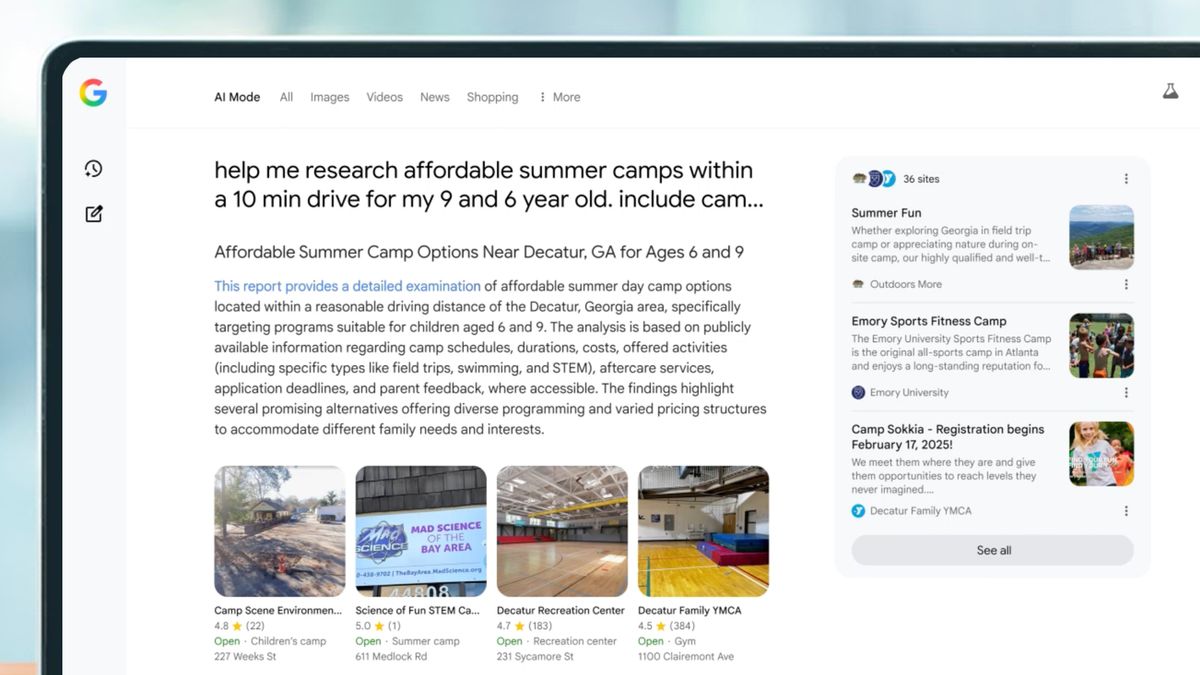

2. Google also has a new “Deep Search” AI mode

Many AI chatbots, including ChatGPT and Perplexity, now offer a deep research mode for longer research projects that need a bit more than a quick Google. Well, Google now has its own equivalent for Search called, yes, “Deep Search”.

Coming to Labs “in the coming months” (always the vaguest of release windows), Deep Search is a feature within AI Mode that’s based on the same “query fan-out” technique as that broader mode but, according to Google, takes it to “the next level”.

In practice, that should mean an “expert-level, fully-cited report” (as Google calls it) in just a few minutes, which sounds like a big time-saver, as long as the accuracy is a bit better than Google’s AI Overviews.

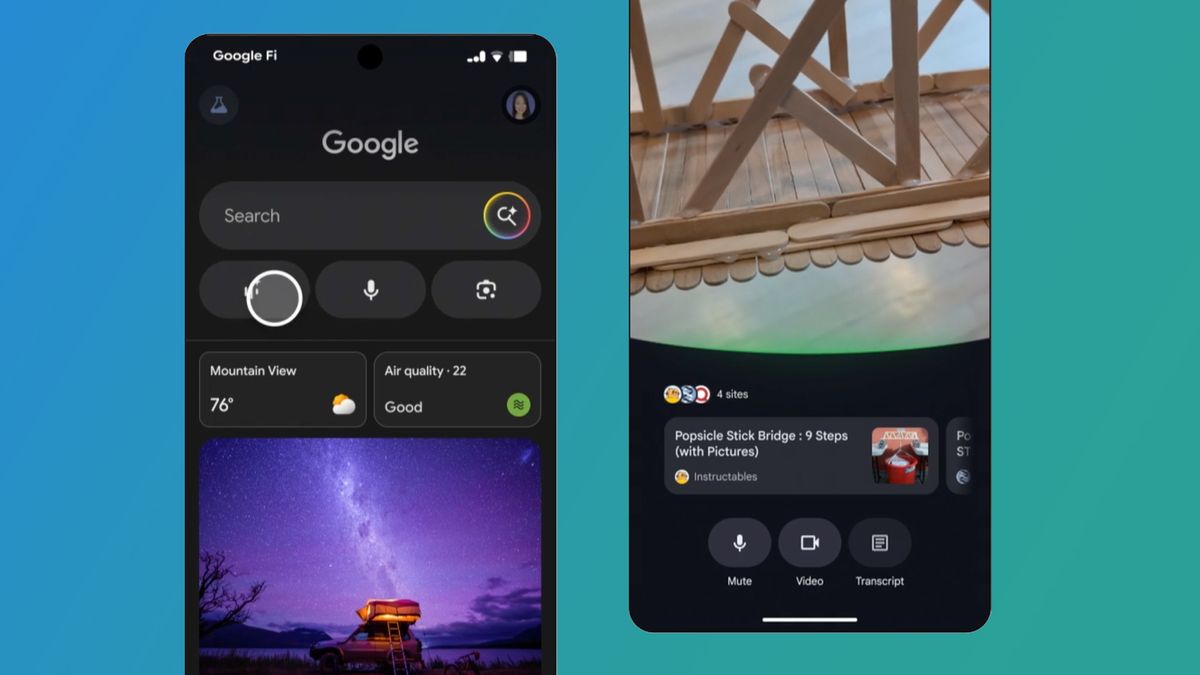

3. Search Live lets you quiz Google with your camera

Google already lets you ask questions about the world with Google Lens, and it demoed its Project Astra universal assistant at Google I/O 2024. Well, it’s now folding Astra into Google Search so you can ask questions in real time using your smartphone’s camera.

“Search Live” is another Labs feature and will be marked by a “Live” icon in Google’s AI Mode or in Google Lens. Tap it and you can point your camera and have a back-and-forth conversation with Google about what’s in front of you, while it serves up links with more information.

The idea sounds good in theory, but we’ve yet to try it beyond last year’s prototype incarnation, and the multimodal AI project is cloud-based, so your mileage may vary depending on where you use it. Still, we’re excited to see how far it has come over the past year with this new Labs version in Search.

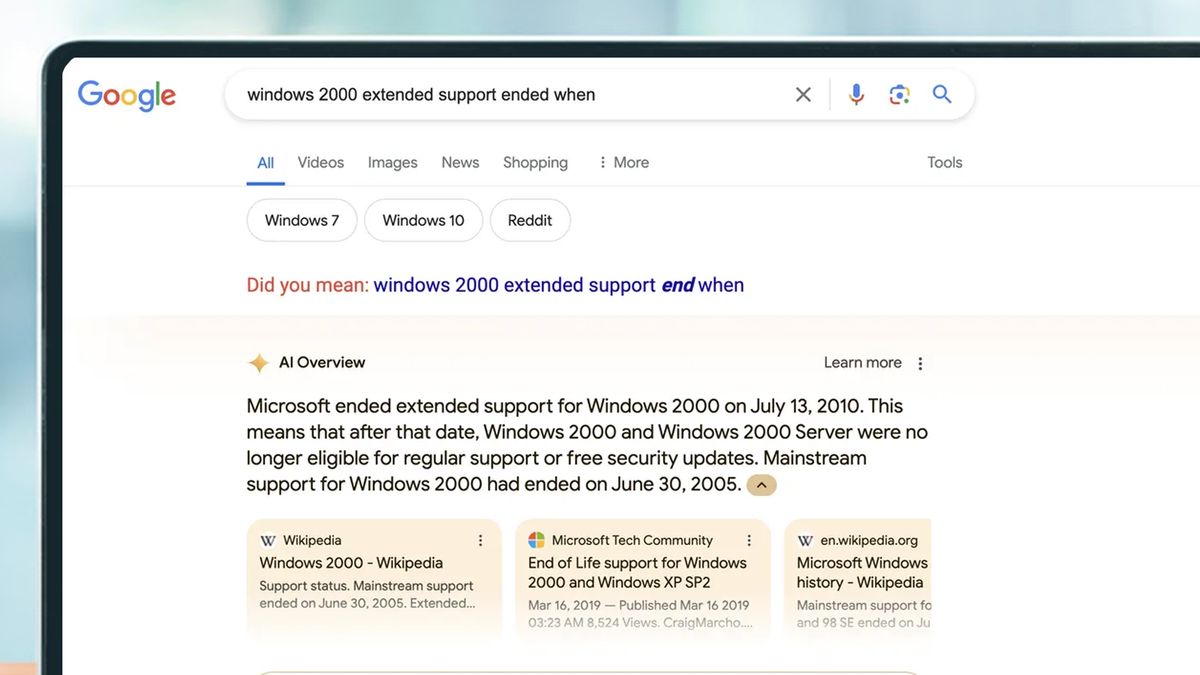

4. AI Overviews are going global

We’re not exactly wild about AI Overviews, the short AI-generated paragraphs you often see at the top of your search results. They’re sometimes inaccurate and have produced some infamous clangers, like recommending that people add glue to their pizzas. But Google is ploughing on with them and has announced that AI Overviews are getting a wider rollout.

The expansion means the feature will be available in more than 200 countries and territories and in more than 40 languages worldwide. In other words, this is the new normal for Google Search, so we’d better get used to it.

Google’s Liz Reid (VP, Head of Search) acknowledged in a press briefing before Google I/O 2025 that AI Overviews have been a learning experience, but said they have improved since those early incidents.

“Many of you may have seen that a set of issues came up last year. Although they were very much edge cases and quite rare, we also took them very, very seriously and have made a lot of improvements since then,” she said.

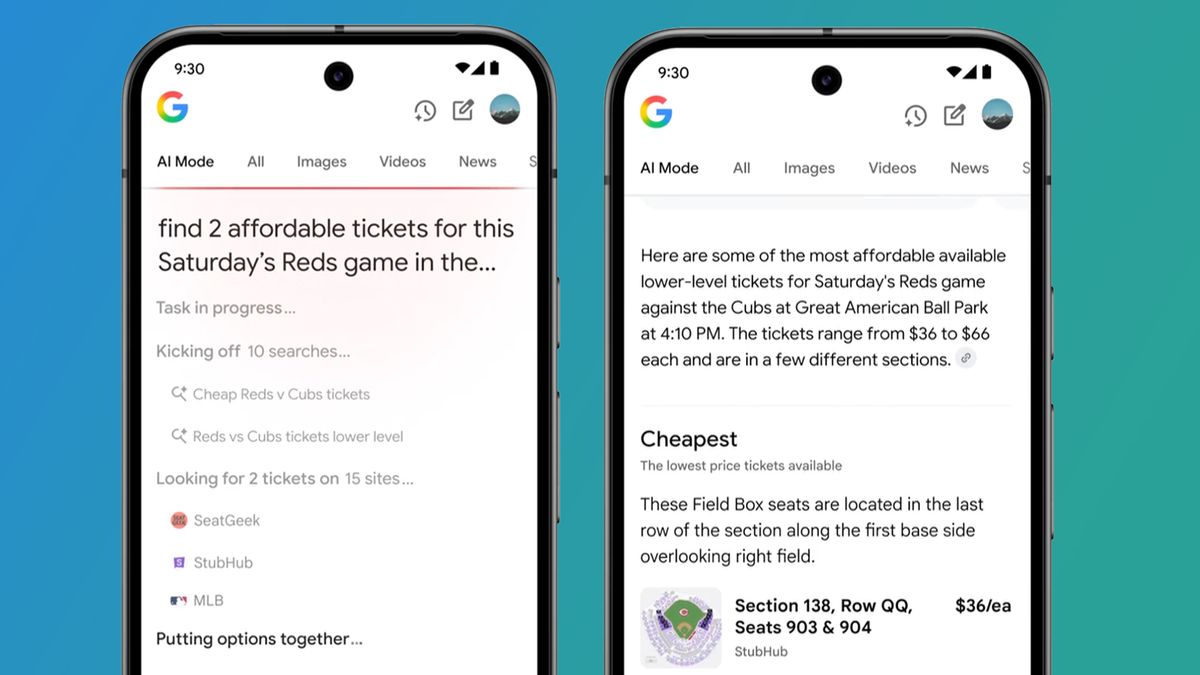

5. Google Search will soon be your ticket-buying agent

Finding and buying tickets is still a painful experience in Google Search. Fortunately, Google is promising a new mode powered by Project Mariner, an AI agent that can browse the web like a human and complete tasks.

Rather than being a separate feature, it will apparently live within AI Mode and kick in when you ask questions like “find two affordable tickets for this Saturday’s Reds game in the lower level”.

It will then scurry off and analyze hundreds of ticket options with real-time pricing. It can also fill in forms, leaving you with the simple task of hitting the “purchase” button (in theory, at least).

The only downside is that it’s another of Google’s Labs projects that will launch “in the coming months”, so who knows when we’ll actually see it in action.

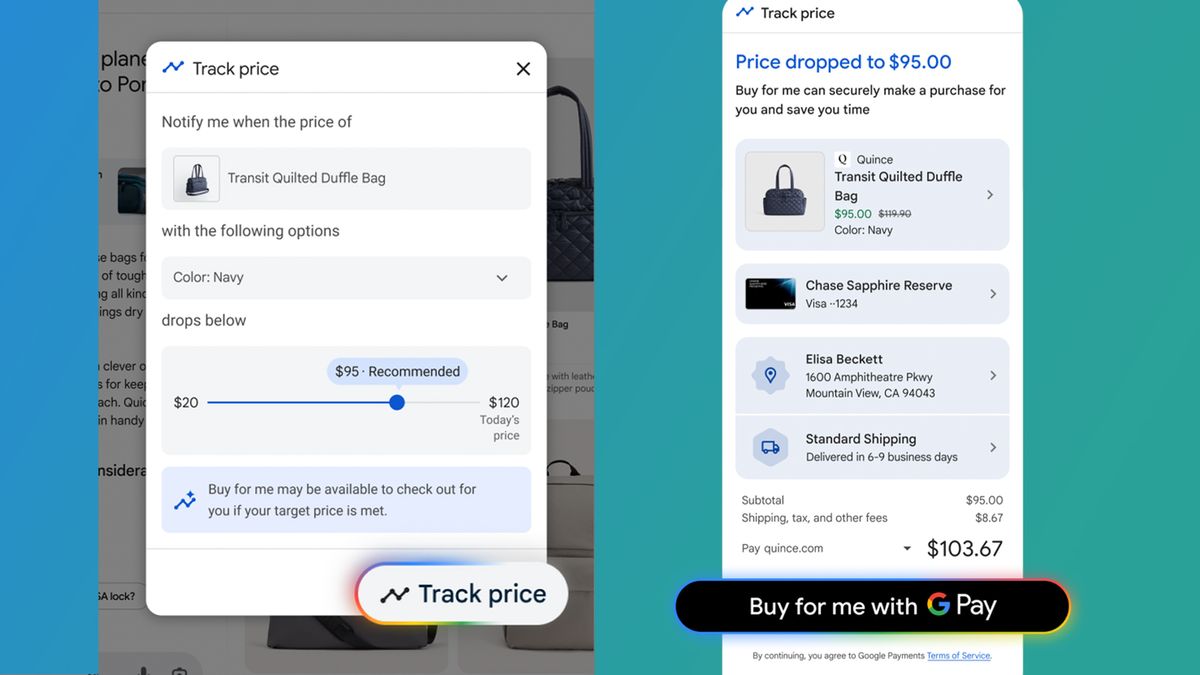

6. Shopping gets an AI Mode upgrade

Google gave the Shopping tab in Google Search a big update in October 2024, and now many of those features are getting another boost thanks to a new integration with AI Mode.

The “virtual try-on” feature (which lets you upload a photo of yourself to see how new clothes might look on you) is back, but the biggest new feature is an AI-powered checkout that tracks prices for you, then buys things on your behalf using Google Pay when the price is right (with your confirmation, of course).

We’re not sure this will help cure our gear acquisition syndrome, but it does have some time-saving (and money-saving) potential.

7. Google Search is getting even more personalized (if you want it to be)

Like traditional Search, Google’s new AI Mode will offer suggestions based on your previous searches, but you can also make it a lot more personalized. Google says you can connect it to some of its other services, including Gmail, to help it answer your queries with a more tailored, personal touch.

One example Google gave was asking AI Mode for “things to do in Nashville this weekend with friends”. If you’ve connected it to other Google services, it could use your previous restaurant bookings and searches to tilt the results towards restaurants with outdoor seating.

There are obvious issues here. For many people, this may be a privacy invasion too far, so they’ll probably choose not to connect it to other services. These “personal context” powers also seem prone to “echo chamber” problems, since they assume you always want to repeat your previous preferences.

Still, it could be another handy evolution of Search for some, and Google says you can manage your personalization settings at any time.