- Google's Ironwood TPU scales to 9,216 chips with a record 1.77PB of shared memory

- Its dual-die architecture delivers 4,614 TFLOPs of FP8 performance and 192GB of HBM3e per chip

- Enhanced reliability features and AI-assisted design enable efficient large-scale inference workloads

Google closed out the machine learning sessions at the recent Hot Chips 2025 event with a detailed look at its newest tensor processing unit, Ironwood.

The chip, first revealed at Google Cloud Next 25 in April 2025, is the company's first TPU designed primarily for large-scale inference workloads rather than training, and it arrives as the seventh generation of TPU hardware.

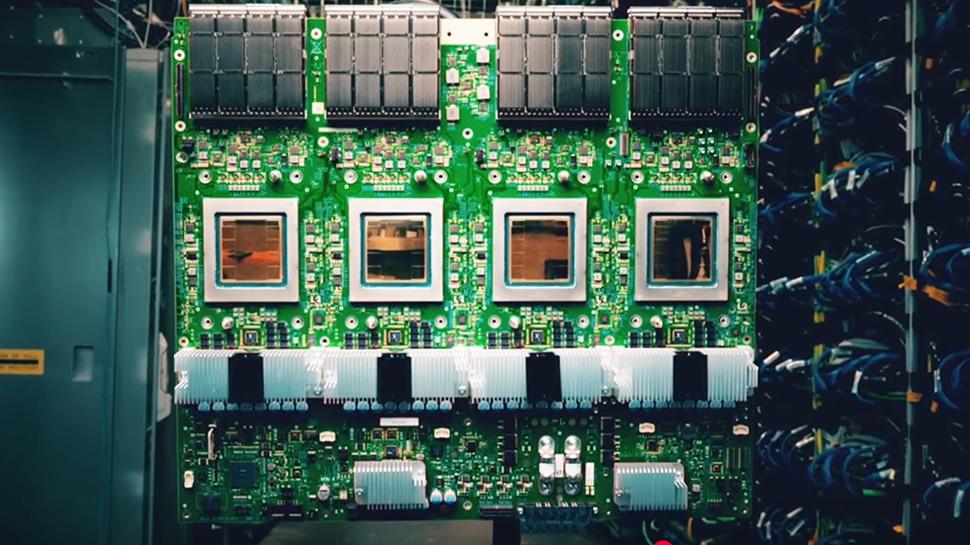

Each Ironwood chip integrates two compute dies, delivering 4,614 TFLOPs of FP8 performance, and eight stacks of HBM3e provide 192GB of memory capacity per chip, paired with 7.3TB/s of bandwidth.

1.77PB of HBM

Google has built in 1.2TBps of I/O bandwidth to allow a system to scale up to 9,216 chips per pod without glue logic. That configuration reaches a massive 42.5 exaflops of performance.

Memory capacity also scales impressively. Across a pod, Ironwood offers 1.77PB of directly addressable HBM. That level sets a new record for shared-memory supercomputers, and it is enabled by optical circuit switches linking racks together.
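The pod-level figures follow directly from the per-chip numbers quoted above. A minimal sketch of the arithmetic, assuming decimal unit prefixes and perfectly linear scaling across all 9,216 chips (the function and constant names are illustrative, not from Google):

```python
# Per-chip figures as quoted in the article.
CHIPS_PER_POD = 9_216
FP8_TFLOPS_PER_CHIP = 4_614
HBM_GB_PER_CHIP = 192


def pod_totals(chips=CHIPS_PER_POD,
               tflops=FP8_TFLOPS_PER_CHIP,
               hbm_gb=HBM_GB_PER_CHIP):
    """Return (exaflops, petabytes) for one pod.

    Assumes decimal prefixes (1 exaflop = 1e6 teraflops, 1 PB = 1e6 GB)
    and ideal linear scaling, which is a simplification.
    """
    exaflops = chips * tflops / 1e6
    petabytes = chips * hbm_gb / 1e6
    return exaflops, petabytes


exaflops, petabytes = pod_totals()
print(f"{exaflops:.1f} exaflops FP8, {petabytes:.2f}PB HBM")
# prints "42.5 exaflops FP8, 1.77PB HBM"
```

Multiplying out gives 42,522,624 TFLOPs and 1,769,472GB, which round to the 42.5 exaflops and 1.77PB cited for a full pod.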

The hardware can reconfigure around failed nodes, restoring workloads from checkpoints.

The chip includes several features aimed at stability and resilience. These include an on-chip root of trust, built-in self-test functions, and measures to mitigate silent data corruption.

Logic repair functions are included to improve manufacturing yield. The emphasis on RAS (reliability, availability, and serviceability) is visible throughout the architecture.

Cooling is handled by a cold plate solution supported by the third generation of Google's liquid cooling infrastructure.

Google claims a twofold improvement in performance per watt compared with Trillium. Dynamic voltage and frequency scaling further improves efficiency under varying workloads.

Ironwood also incorporates AI techniques in its own design. AI was used to help optimize the ALU circuits and the floorplan.

A fourth-generation SparseCore has been added to accelerate embeddings and collective operations, supporting workloads such as recommendation engines.

Deployment is already underway at hyperscale in Google Cloud data centers, although the TPU remains an internal platform that is not directly available to customers.

Commenting on the session at Hot Chips 2025, ServeTheHome's Ryan Smith said: "This was an impressive presentation. Google saw the need to build high-end AI compute several generations ago. Now the company is innovating at every level, from the chips to the interconnects to the physical infrastructure."