- The system connects remote facilities to continuously run massive training workloads.

- High-speed fiber keeps GPUs active, avoiding slow data bottlenecks

- Two-story chip density increases computing power while reducing inter-rack latency

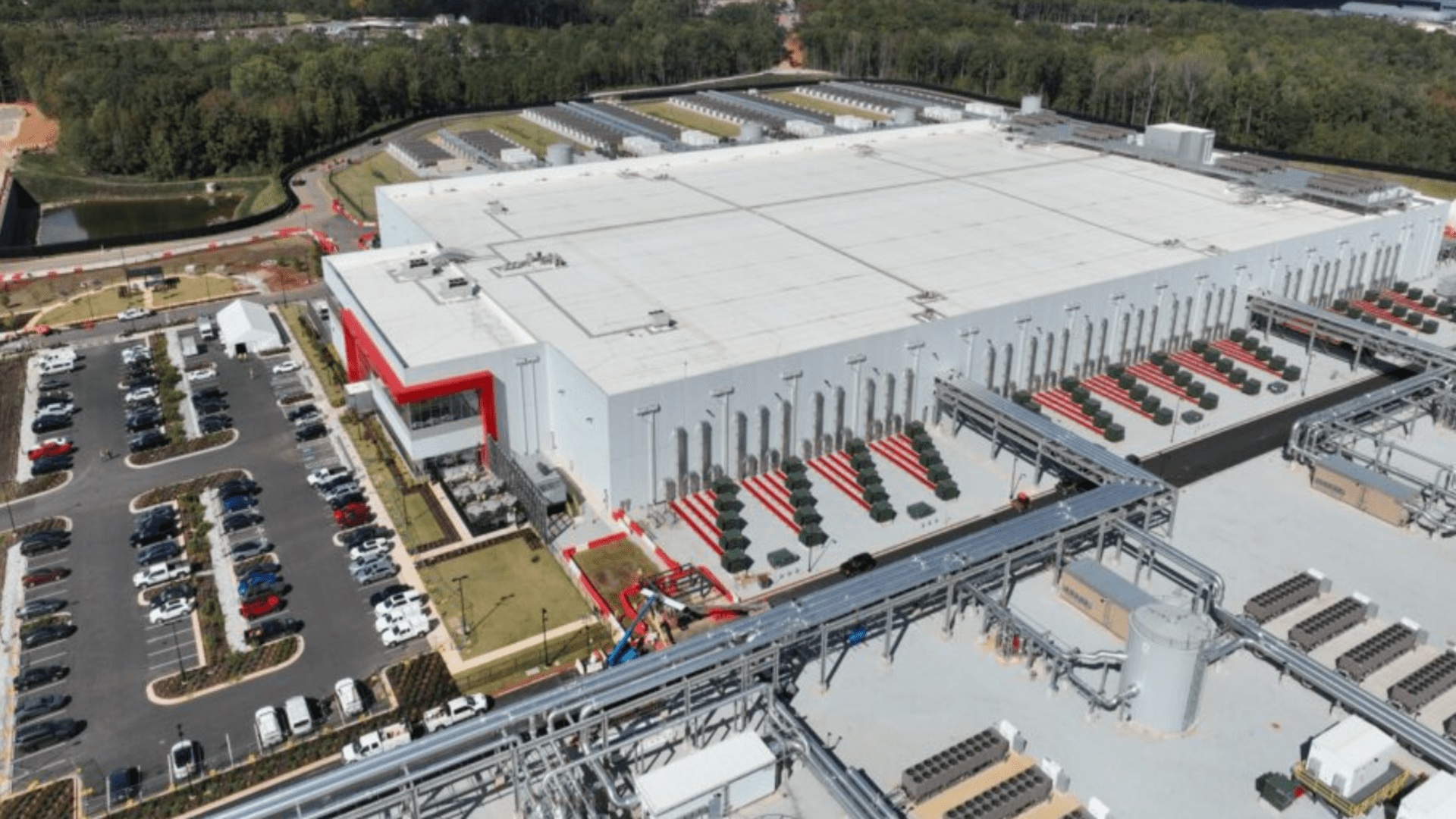

Microsoft has unveiled its first AI superfactory, connecting large AI data centers in Wisconsin and Atlanta via a dedicated fiber optic network designed for high-speed movement of training data.

The design places the chips close together across two floors to increase density and reduce lag.

It also uses extensive cabling and liquid cooling systems designed to handle the weight and heat produced by large hardware clusters.

A network built for large-scale model training

In a blog post, Microsoft said this setup will support large AI workloads that differ from the smaller, more isolated tasks common in cloud environments.

“It’s about building a distributed network that can act as a virtual supercomputer to solve the world’s biggest challenges,” said Alistair Speirs, Microsoft’s general manager of Azure infrastructure.

“The reason we call it an AI superfactory is because it performs a complex task on millions of hardware components… it’s not just a single site training an AI model, it’s a network of sites supporting that task.”

The AI WAN system moves data across thousands of kilometers over dedicated fiber, some of it newly built and some repurposed from earlier acquisitions.

Network protocols and architecture have been adjusted to shorten paths and keep data flowing with minimal delay.

Microsoft says this allows remote locations to cooperate on the same model training process in near real time, with each location contributing its share of computation.

The emphasis is on keeping a large number of GPUs continuously busy, so that no unit sits idle waiting for results from another location.

“Being a leader in AI isn’t just about adding more GPUs, it’s also about building the infrastructure that makes them work together as one system,” said Scott Guthrie, executive vice president of Microsoft Cloud + AI.

Microsoft uses its Fairwater data center design to support high-throughput rack systems, including Nvidia GB200 NVL72 units designed to scale to very large Blackwell GPU clusters.

The company pairs this equipment with liquid cooling systems that send the heated fluid outside the building and return it at lower temperatures.

Microsoft says the cooling system uses almost no new water in operation, apart from periodic replacement when needed to maintain water chemistry.

The Atlanta site mirrors the Wisconsin layout, providing consistent architecture across multiple regions as more facilities come online.

“To improve the capabilities of AI, you need to have more and more infrastructure to train it,” said Mark Russinovich, CTO, deputy CISO and Technical Fellow at Microsoft Azure.

“The amount of infrastructure required today to train these models is not just one data center, not two, but multiples of that.”

The company positions these sites as purpose-built for training advanced AI models, citing growing parameter counts and larger training datasets as the key pressures driving expansion.

The facilities integrate exabytes of storage and millions of CPU cores to support the tasks surrounding core training workflows.

Microsoft suggests that this scale is necessary so that partners such as OpenAI and its own AI superintelligence team can continue model development.