- HBF offers ten times the capacity of HBM while remaining slower than DRAM

- GPUs will access larger data sets via tiered HBM-HBF memory

- Writes to HBF are limited, forcing the software to focus on reads

The explosion of AI workloads has put unprecedented pressure on memory systems, forcing companies to rethink how they deliver data to accelerators.

High-bandwidth memory (HBM) serves as a fast cache for GPUs, allowing AI tools to efficiently read and process key-value (KV) data.

However, HBM is fast but expensive and limited in capacity, while high-bandwidth flash (HBF) offers much greater capacity at slower speeds.

How HBF complements HBM

HBF’s design lets GPUs access a larger data set, but each module tolerates only a limited number of writes, approximately 100,000, so software must prioritize reads over writes.

HBF will integrate alongside HBM near AI accelerators, forming a tiered memory architecture.

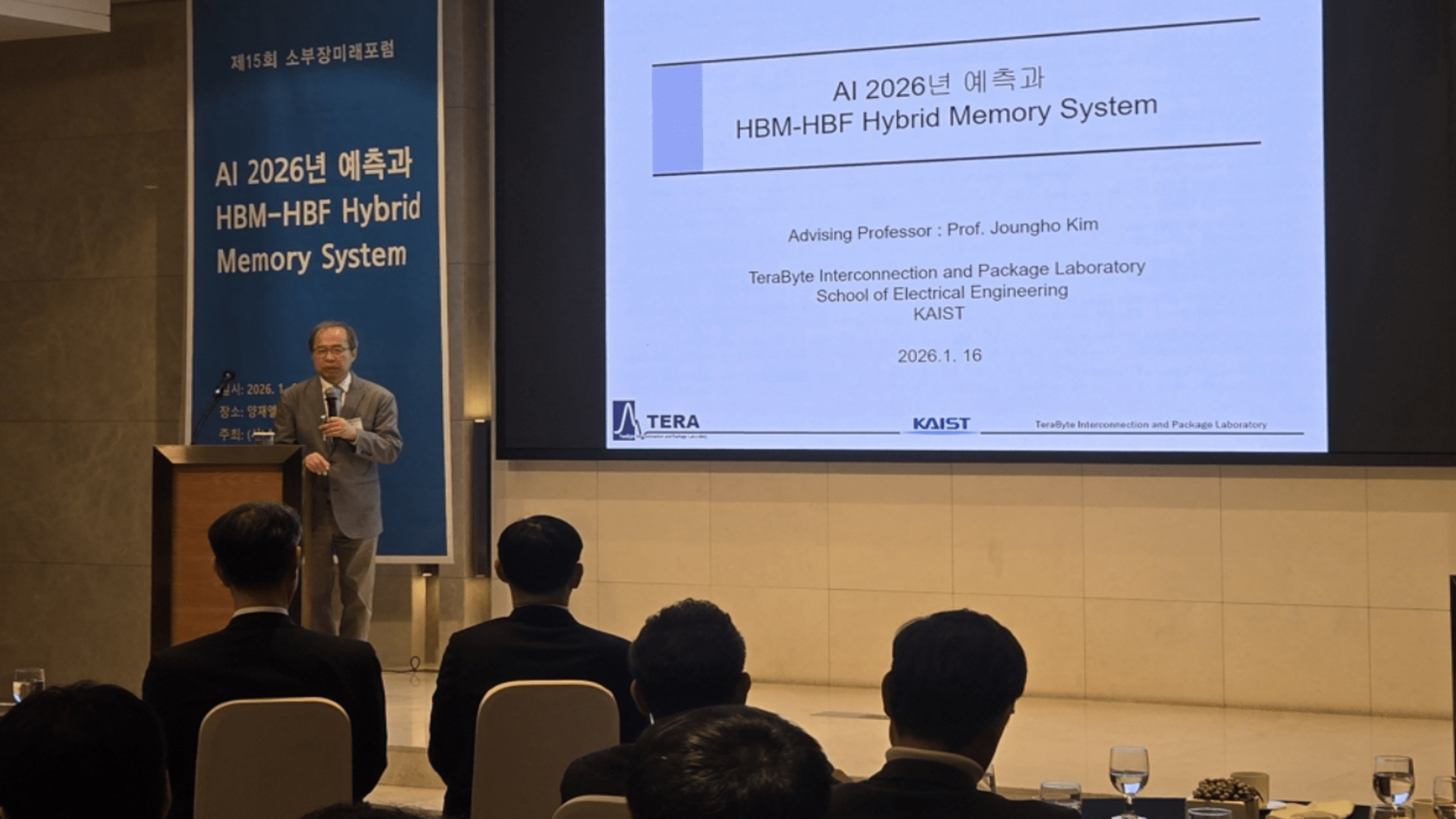

Professor Kim Joungho of KAIST compares HBM to a home library kept close at hand for quick study, while HBF is like a much larger library that holds far more content but is slower to access.

“For a GPU to perform AI inference, it needs to read variable data called KV cache from the HBM. Then it interprets it and spits it out word by word, and I think it will use the HBF for this task,” Professor Kim said.

“HBM is fast, HBF is slow, but its capacity is about 10 times larger. However, while HBF has no limit on the number of reads, it has a limit on the number of writes, about 100,000. Therefore, when OpenAI or Google write programs, they must structure their software so that it focuses on reads.”
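The read-focused structure Professor Kim describes can be illustrated with a small Python sketch. It is a toy model under stated assumptions, not how any vendor or AI framework implements tiering: the class `TieredKVStore`, its method names, and the idea of counting one write per stored item against a budget of 100,000 are all hypothetical choices made for illustration.

```python
from collections import OrderedDict


class TieredKVStore:
    """Toy two-tier store: a small fast tier (HBM-like) in front of a
    large, write-limited tier (HBF-like). All names are hypothetical."""

    def __init__(self, fast_capacity, slow_write_budget=100_000):
        self.fast = OrderedDict()          # LRU order, oldest entry first
        self.slow = {}                     # large tier, written rarely
        self.fast_capacity = fast_capacity
        self.slow_writes_left = slow_write_budget  # assumed write limit
        self.slow_reads = 0                # reads are not limited, only counted

    def preload(self, items):
        """Write read-mostly data into the slow tier, one write per item."""
        for key, value in items.items():
            if self.slow_writes_left <= 0:
                raise RuntimeError("slow-tier write budget exhausted")
            self.slow[key] = value
            self.slow_writes_left -= 1

    def put(self, key, value):
        """Frequently changing data is written to the fast tier only."""
        self.fast[key] = value
        self.fast.move_to_end(key)
        while len(self.fast) > self.fast_capacity:
            # Evict the least recently used entry. It is dropped rather
            # than spilled to the slow tier, so eviction costs no writes.
            self.fast.popitem(last=False)

    def get(self, key):
        """Serve from the fast tier if possible, else read the slow tier."""
        if key in self.fast:
            self.fast.move_to_end(key)
            return self.fast[key]
        value = self.slow[key]             # raises KeyError if absent
        self.slow_reads += 1
        self.put(key, value)               # promote into the fast tier
        return value
```

In this sketch the slow tier is written only during `preload`, and every later access is either a fast-tier hit or a slow-tier read followed by promotion, so the write budget is never touched during normal operation. A real system would also need to decide what happens to evicted data that exists only in the fast tier, which this toy simply discards.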

HBF is expected to debut with HBM6, where multiple HBM stacks interconnect in a network, increasing both bandwidth and capacity.

The concept envisions future iterations like HBM7 operating as a “memory factory,” where data can be processed directly from HBFs without going through traditional storage networks.

HBF stacks multiple 3D NAND chips vertically, much as HBM stacks DRAM, and connects them with through-silicon vias (TSVs).

A single HBF unit can reach a capacity of 512 GB and a bandwidth of up to 1.638 TB/s, far exceeding the speeds of standard NVMe PCIe 4.0 SSDs.
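To put those figures in perspective, here is a rough back-of-the-envelope calculation in Python. It reads the quoted bandwidth as 1.638 TB/s (1,638 GB/s) and assumes a round 7 GB/s for a fast PCIe 4.0 NVMe SSD; both values are assumptions for illustration rather than vendor specifications.

```python
HBF_CAPACITY_GB = 512
HBF_BANDWIDTH_GB_PER_S = 1638   # 1.638 TB/s, assumed reading of the quoted figure
SSD_BANDWIDTH_GB_PER_S = 7      # assumption: round figure for a fast PCIe 4.0 SSD


def full_read_seconds(capacity_gb, bandwidth_gb_per_s):
    """Time to stream the whole capacity once at peak bandwidth."""
    return capacity_gb / bandwidth_gb_per_s


hbf_s = full_read_seconds(HBF_CAPACITY_GB, HBF_BANDWIDTH_GB_PER_S)
ssd_s = full_read_seconds(HBF_CAPACITY_GB, SSD_BANDWIDTH_GB_PER_S)
speedup = ssd_s / hbf_s

print(f"HBF: {hbf_s:.2f} s, SSD: {ssd_s:.1f} s, ratio: {speedup:.0f}x")
```

Under these assumptions, streaming the full 512 GB takes well under a second from HBF compared with more than a minute from the SSD. Peak figures like these ignore latency and access patterns, so real workloads would see a smaller gap.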

SK Hynix and Sandisk presented diagrams showing the upper NAND layers connected via TSVs to a base logic chip, forming a functional stack.

HBF prototype chips require careful manufacturing to avoid distortion in lower layers, and additional NAND stacks would further increase the complexity of TSV connections.

Samsung Electronics and Sandisk plan to integrate HBF into Nvidia, AMD and Google AI products over the next 24 months.

SK Hynix will release a prototype later this month, while the companies are also working on standardization through a consortium.

HBF adoption is expected to accelerate in the HBM6 era, and Kioxia has already prototyped a 5 TB HBF module using PCIe Gen 6 x8 at 64 Gbps. Professor Kim predicts that the HBF market could surpass that of HBM by 2038.

Via Sisa Newspaper (originally in Korean)
