- NVIDIA's ConnectX-8 brings a GPU-inspired design to networking

- ConnectX-8 offers an impressive 800 Gb/s of throughput

- Requires PCIe Gen6 x16 for optimal performance

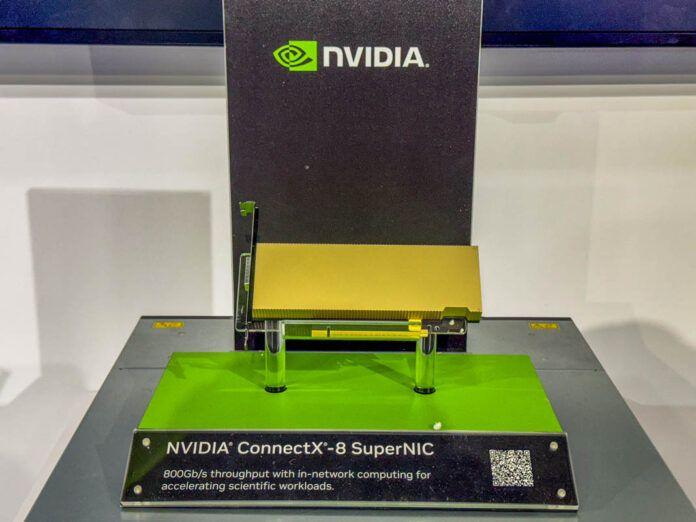

NVIDIA has unveiled the ConnectX-8 SuperNIC, a new card with 800 Gb/s of bandwidth, double the 400 Gb/s of its predecessor.

The card's design departs from that of conventional network interface cards (NICs) and looks more like a traditional GPU. The ConnectX-8 appears to be built to improve airflow and cooling efficiency, with a low-profile design, a backplate, and an advanced board layout.

The card also has a large connector at the rear, which hints at multi-host cable connections, possibly for linking additional processors or for relying on PCIe switch output.

Multi-host connections and flexibility

The ConnectX-8 SuperNIC is a single-port card whose high bandwidth demands advanced PCIe connectivity.

More specifically, it needs either one PCIe Gen6 x16 link or two Gen5 x16 links to run at full speed, suggesting that the card's demands exceed what a single CPU can handle. This capability matches the need for robust connectivity in NVIDIA Grace platforms, where the ConnectX-8 serves as a critical component for additional connectivity because of the limitations of Grace processors.
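The rough arithmetic behind the PCIe requirement can be sketched as follows. This is a simplified model, not NVIDIA's sizing method: it assumes 32 GT/s per lane for Gen5 with 128b/130b encoding and 64 GT/s per lane for Gen6, and it ignores Gen6 FLIT overhead and all transaction-layer overhead, so real usable throughput is somewhat lower. The names `link_gbps` and `PORT_GBPS` are hypothetical.

```python
# Simplified per-direction PCIe bandwidth model versus an 800 Gb/s network port.
# Assumptions (not from the article): Gen5 = 32 GT/s/lane with 128b/130b encoding,
# Gen6 = 64 GT/s/lane with FLIT-mode overhead NOT modeled (raw rate only).

GEN_RATES_GT = {5: 32.0, 6: 64.0}            # transfer rate per lane, GT/s
ENCODING_EFFICIENCY = {5: 128 / 130, 6: 1.0}  # Gen6 FLIT overhead ignored

PORT_GBPS = 800.0  # ConnectX-8 port speed, Gb/s


def link_gbps(gen: int, lanes: int = 16) -> float:
    """Approximate per-direction bandwidth of one PCIe link, in Gb/s."""
    return GEN_RATES_GT[gen] * lanes * ENCODING_EFFICIENCY[gen]


configs = {
    "1x Gen5 x16": link_gbps(5),
    "2x Gen5 x16": 2 * link_gbps(5),
    "1x Gen6 x16": link_gbps(6),
}

for name, bw in configs.items():
    verdict = "enough" if bw >= PORT_GBPS else "bottleneck"
    print(f"{name}: {bw:.0f} Gb/s -> {verdict} for {PORT_GBPS:.0f} Gb/s")
```

Under these assumptions, a single Gen5 x16 link (about 504 Gb/s) falls well short of 800 Gb/s, while two Gen5 x16 links or one Gen6 x16 link clear it, which is consistent with the requirement described above.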

By integrating these NICs, NVIDIA reduces its dependence on Broadcom products, improving system efficiency and flexibility.

Unlike older NIC designs, which typically feature bulky heatsinks and a more utilitarian look, the ConnectX-8 has a sleek backplate and an overall form factor reminiscent of modern GPUs.

The ConnectX-8's introduction does not appear to be merely a matter of aesthetics; it suggests that NVIDIA has a broader vision for AI infrastructure. By aligning its networking products with GPU-style designs, the company is likely to streamline their integration into data centers while delivering the high performance that AI workloads require.