- Future AI memory chips could demand more power than entire industrial zones combined

- 6 TB of memory in a single GPU sounds incredible until you see the power draw

- HBM8 stacks are impressive in theory, but terrifying in practice for any energy-conscious business

The relentless drive to expand AI processing power is ushering in a new era for memory technology, but it comes at a cost that raises practical and environmental concerns, experts have warned.

Research from the Korea Advanced Institute of Science & Technology (KAIST) and its Terabyte Interconnection and Package Laboratory suggests that by 2035, AI accelerators equipped with 6 TB of HBM could become a reality.

These developments, while technically impressive, also highlight the steep power requirements and growing complexity involved in pushing the limits of AI infrastructure.

Rising AI GPU memory capacity brings enormous power consumption

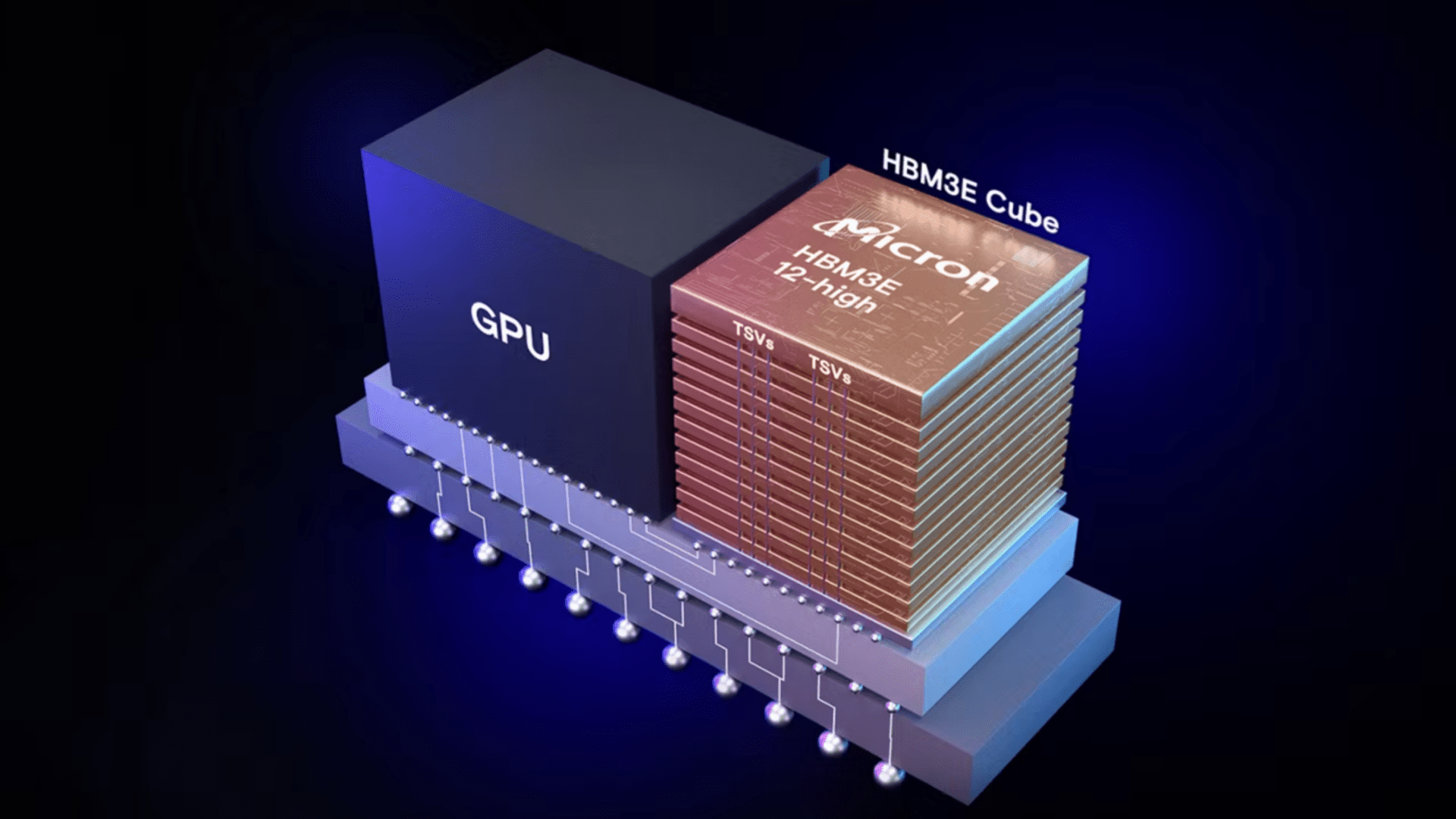

The roadmap reveals that the evolution from HBM4 to HBM8 will deliver major gains in bandwidth, memory stacking, and cooling techniques.

Starting in 2026 with HBM4, NVIDIA and AMD Instinct platforms will incorporate up to 432 GB of memory, with bandwidths reaching nearly 20 TB/s.

This memory type uses direct-to-chip liquid cooling and custom packaging to handle power densities of around 75 to 80 W per stack.

HBM5, projected for 2029, doubles the number of input/output (I/O) lanes and moves toward immersion cooling, with up to 80 GB per stack consuming 100 W.

However, power requirements will keep climbing with HBM6, expected by 2032, which pushes bandwidth to 8 TB/s and stack capacity to 120 GB, with each stack drawing up to 120 W.
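To put the per-stack figures side by side, here is a minimal Python sketch comparing capacity per watt for the HBM5 and HBM6 maxima quoted above. The `StackSpec` class and `ROADMAP` list are hypothetical names for illustration, and the sketch assumes each stack runs at its quoted peak power, which real workloads may not.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StackSpec:
    """Per-stack maxima as quoted in the roadmap (hypothetical container)."""
    name: str
    year: int
    capacity_gb: float
    power_w: float

    @property
    def gb_per_watt(self) -> float:
        # Capacity delivered per watt, assuming the stack draws its peak power
        return self.capacity_gb / self.power_w


ROADMAP = [
    StackSpec("HBM5", 2029, 80, 100),
    StackSpec("HBM6", 2032, 120, 120),
]

for spec in ROADMAP:
    print(f"{spec.name} ({spec.year}): {spec.gb_per_watt:.2f} GB per W")

# Generation-over-generation change in capacity and power
prev, curr = ROADMAP
print(f"capacity +{curr.capacity_gb / prev.capacity_gb - 1:.0%}, "
      f"power +{curr.power_w / prev.power_w - 1:.0%}")
```

On these numbers, HBM6 adds 50% more capacity per stack for 20% more power, so capacity per watt improves even as absolute draw rises.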

These figures add up quickly when considering full GPU packages, which are expected to consume up to 5,920 W per chip, assuming 16 HBM6 stacks in a system.
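The 5,920 W package figure can be partly decomposed with simple arithmetic. This Python sketch, using a hypothetical `memory_share` helper, multiplies the quoted 16 stacks by 120 W each. It assumes every stack draws its maximum and does not attempt to attribute the remainder of the package budget, which the roadmap summary here does not break down.

```python
# HBM6-era figures quoted in the text (~2032)
STACKS_PER_PACKAGE = 16   # "assuming 16 HBM6 stacks"
WATTS_PER_STACK = 120     # "each stack drawing up to 120 W"
GB_PER_STACK = 120        # "stack capacity to 120 GB"
PACKAGE_WATTS = 5_920     # quoted full-package figure


def memory_share(stacks: int, watts_per_stack: float,
                 package_watts: float) -> tuple[float, float]:
    """Return (total HBM power in W, fraction of the package budget it uses)."""
    hbm_watts = stacks * watts_per_stack
    return hbm_watts, hbm_watts / package_watts


hbm_w, frac = memory_share(STACKS_PER_PACKAGE, WATTS_PER_STACK, PACKAGE_WATTS)
print(f"HBM power: {hbm_w:,} W ({frac:.0%} of {PACKAGE_WATTS:,} W)")
print(f"HBM capacity: {STACKS_PER_PACKAGE * GB_PER_STACK:,} GB")
```

Under these assumptions the memory stacks alone account for 1,920 W, roughly a third of the quoted package power.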

With the arrival of HBM7 and HBM8, the figures stretch into previously unimaginable territory.

HBM7, expected around 2035, triples bandwidth to 24 TB/s and allows up to 192 GB per stack. The architecture supports 32 memory stacks, pushing total memory capacity beyond 6 TB, but power demand reaches 15,360 W per package.
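A quick check of the capacity claim, as a minimal sketch assuming all 32 stacks are populated at the 192 GB maximum (a best-case configuration, not a stated product spec). The `total_capacity_gb` helper is a hypothetical name.

```python
# HBM7-era figures quoted in the text (~2035)
STACKS = 32          # "supports 32 memory stacks"
GB_PER_STACK = 192   # "up to 192 GB per stack"


def total_capacity_gb(stacks: int, gb_per_stack: float) -> float:
    """Best-case package capacity: every stack fully populated."""
    return stacks * gb_per_stack


total_gb = total_capacity_gb(STACKS, GB_PER_STACK)
print(f"{total_gb:,} GB total")
print(f"{total_gb / 1000:.3f} TB with 1 TB = 1,000 GB")
print(f"{total_gb / 1024:.3f} TB with 1 TB = 1,024 GB")
```

The total comes to 6,144 GB, just over 6 TB in decimal units and exactly 6 TB if 1 TB is taken as 1,024 GB.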

The estimated power consumption of 15,360 W marks a dramatic increase, representing a sevenfold rise in just nine years.
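A sevenfold rise over nine years implies a steep compound growth rate. The sketch below back-calculates it. The starting wattage is derived by dividing 15,360 W by seven and is not a figure quoted in the article, and `implied_cagr` is a hypothetical helper name.

```python
import math


def implied_cagr(multiple: float, years: float) -> float:
    """Compound annual growth rate that turns 1x into `multiple` over `years`."""
    return multiple ** (1 / years) - 1


END_WATTS = 15_360   # HBM7-era package estimate (~2035)
MULTIPLE = 7         # "sevenfold rise"
YEARS = 9            # 2026 -> 2035

rate = implied_cagr(MULTIPLE, YEARS)
baseline = END_WATTS / MULTIPLE             # back-calculated assumption
doubling = math.log(2) / math.log(1 + rate)  # years for power to double

print(f"~{rate:.1%} per year, doubling every ~{doubling:.1f} years")
print(f"implied starting point: ~{baseline:,.0f} W per package")
```

On these assumptions, package power grows by roughly 24% a year, doubling about every 3.2 years.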

This means a million of them in a data center would consume 15.36 GW, roughly equivalent to the UK's entire onshore wind generation capacity in 2024.
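The data-center figure is a straight unit conversion. This sketch reproduces it and, purely as an illustrative assumption, extends it to annual energy if every package drew its full rated power around the clock. Real utilization would be lower, so the yearly figure is an upper bound rather than a forecast. `fleet_power_gw` and `annual_energy_twh` are hypothetical helper names.

```python
PACKAGES = 1_000_000
WATTS_PER_PACKAGE = 15_360
HOURS_PER_YEAR = 24 * 365   # 8,760 h; ignores leap years


def fleet_power_gw(packages: int, watts_each: float) -> float:
    """Aggregate draw in gigawatts (1 GW = 1e9 W)."""
    return packages * watts_each / 1e9


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """GW x hours = GWh; divide by 1,000 to get TWh."""
    return power_gw * HOURS_PER_YEAR * utilization / 1_000


gw = fleet_power_gw(PACKAGES, WATTS_PER_PACKAGE)
print(f"{gw:.2f} GW")
print(f"{annual_energy_twh(gw):.1f} TWh/year at 100% utilization")
```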

HBM8, projected for 2038, further expands capacity and bandwidth, with 64 TB/s per stack and up to 240 GB of capacity, using 16,384 I/Os at 32 Gbps.
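Per-stack bandwidth is roughly the number of I/O pins multiplied by the per-pin data rate. The sketch below assumes the quoted 32 figure is a per-pin rate in Gbps and that TB here means 1,024 GB; under those assumptions it reproduces the 64 TB/s headline (in decimal units it is about 65.5 TB/s). `stack_bandwidth_gb_s` is a hypothetical helper name.

```python
def stack_bandwidth_gb_s(io_count: int, gbps_per_pin: float) -> float:
    """Peak per-stack bandwidth in GB/s: pins x per-pin rate, bits -> bytes.

    Ignores protocol and encoding overhead, so this is an upper bound.
    """
    return io_count * gbps_per_pin / 8


HBM8_IO = 16_384   # "16,384 I/Os"
HBM8_GBPS = 32     # assumed per-pin data rate in Gbps

bw = stack_bandwidth_gb_s(HBM8_IO, HBM8_GBPS)
print(f"{bw:,.0f} GB/s = {bw / 1024:.0f} TB/s (1 TB = 1,024 GB)")
```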

It also features coaxial TSVs (through-silicon vias), integrated cooling, and double-sided interposers.

The growing demands of AI and large language models (LLMs) have prompted researchers to introduce concepts such as HBF (High-Bandwidth Flash) and HBM-centric computing.

These designs propose integrating NAND flash and LPDDR memory into the HBM stack, relying on new cooling and interconnect methods, but their feasibility and real-world efficiency have yet to be proven.