- Copilot has access to private GitHub repositories, researchers find

- The repositories were public at some point, and Bing cached them

- Caching behavior is “acceptable,” says Microsoft

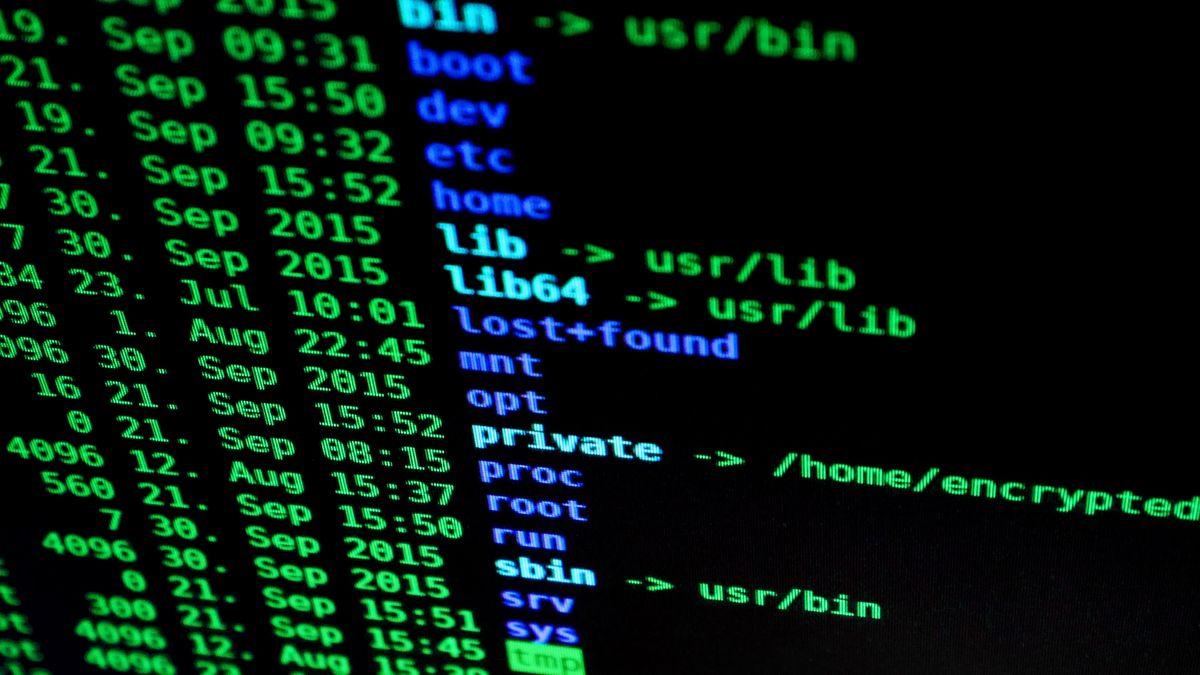

Thousands of private GitHub repositories, some of which contained credentials and other secrets, are exposed via Microsoft Copilot, the generative artificial intelligence (GenAI) virtual assistant, experts have warned.

Cybersecurity researchers at Lasso reported their findings to Microsoft but received a mixed response.

Lasso is a cybersecurity company focused on threats emerging from the use of new AI tools. It found that Copilot was able to retrieve one of its own GitHub repositories, which should have been private and inaccessible from the wider internet. Indeed, navigating directly to the repository on GitHub returns a “page not found” error. However, at one point the team had mistakenly made the repository public for a short period, long enough for Microsoft’s Bing search engine to index and cache it. This allowed Copilot to access the data, even though it should not have been able to.

Serious implications

Lasso investigated further, compiling a list of tens of thousands of repositories that were public at some point and are private today. It found more than 20,000 that can still be accessed via Copilot, belonging to thousands of organizations, including some of the largest players in the technology sector.

The implications of the findings could be quite serious. Speaking to TechCrunch, Lasso co-founder Ophir Dror said he had used the flaw to retrieve a GitHub repository that hosted a tool allowing the creation of “offensive and harmful” AI images using Microsoft’s cloud AI service. Various corporate secrets could also be exposed in this way, which prompted Dror to advise victims to rotate or revoke their keys.

Microsoft reportedly told the company that the issue was “low severity” and that the caching behavior was “acceptable”. However, as of December 2024, Microsoft no longer includes links to the Bing cache in its search results. Copilot can still access the data.