- YouTube launched a deepfake detection tool to help creators identify AI-generated videos using their image without consent.

- The tool works like Content ID, allowing verified creators to review flagged videos and request their removal.

- Initially limited to members of the YouTube Partner Program, the functionality may expand more widely in the future.

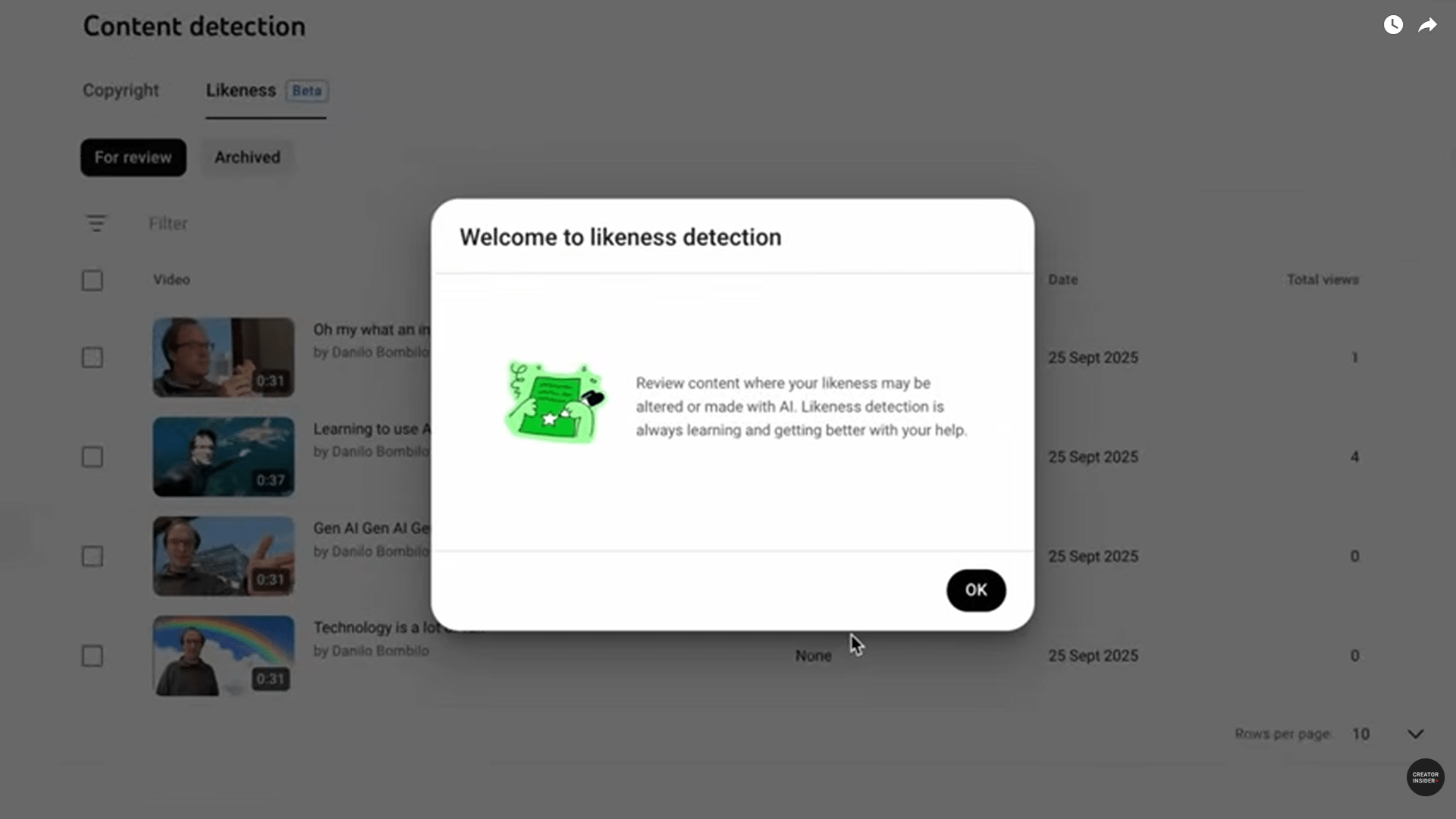

YouTube is starting to take illicit deepfakes more seriously, rolling out a new deepfake detection tool designed to help creators identify and remove videos with AI-generated versions of their image made without their permission.

YouTube has started emailing information to some creators, offering them the ability to scan uploaded videos for potential matches with their face or voice. Once a match is flagged, the creator can review it through a new content detection tab in YouTube Studio and decide whether to take action. They can simply report it, submit a takedown request under privacy rules, or file a full copyright complaint.

For now, the tool is only available to a limited group of users in the YouTube Partner Program, although it will likely expand over time to any monetized creator on the platform.

This is similar to how YouTube worked with Creative Artists Agency (CAA) in 2023 to give high-profile celebrity clients early access to prototype AI detection tools, while gathering feedback from some of the people most likely to be impersonated by AI.

Creators must register by submitting a government-issued photo ID and a short video clip of themselves. This biometric proof helps the detection system recognize when it is really them. Once registered, they will start receiving alerts when potential matches are spotted. YouTube warns, however, that not all deepfakes will be detected, particularly if they are heavily manipulated or uploaded at low resolution.

The new system is very similar to the current Content ID tool. But while Content ID searches for reused audio and video clips to protect copyright holders, this new tool focuses on biometric mimicry. YouTube naturally thinks creators will appreciate being able to control their digital identity in a world where AI can stitch your face and voice over someone else’s words in seconds.

Face control

Still, for creators worried about their reputation, it’s a start. And for YouTube, this marks a significant shift in its approach to AI-generated content. Last year, the platform revised its privacy policies to allow ordinary users to request the removal of content imitating their voice or face.

It also introduced specific mechanisms for musicians and vocal performers to protect their unique voices from AI cloning or reuse. This new tool puts these protections directly in the hands of creators with verified channels – and hints at a broader ecosystem shift to come.

For viewers, the change is perhaps less visible, but no less significant. The rise of AI tools means that impersonation, misinformation and misleading edits are now easier than ever to produce. While detection tools won’t eliminate all synthetic content, they increase accountability: If a creator sees a fake version of themselves circulating, they now have the power to respond, which hopefully means viewers won’t fall for fraud.

This matters in an environment where trust is already frayed. From AI-generated Joe Rogan podcast clips to fraudulent celebrity endorsements selling crypto, deepfakes are becoming more convincing and harder to trace. For the average person, it can be almost impossible to tell if a clip is real.

YouTube isn’t the only one trying to solve the problem. Meta announced it would tag synthetic images on Facebook and Instagram, and TikTok introduced a tool that allows creators to voluntarily tag synthetic content. But YouTube’s approach is more direct when it comes to likenesses being used for malicious purposes.

The detection system is not without limitations. It relies heavily on pattern matching, which means that heavily edited or stylized content may not be flagged. It also requires creators to place a certain level of trust in YouTube, both to handle their biometric data responsibly and to act quickly when takedown requests are made.

However, it is better than doing nothing. And by modeling this feature on Content ID's established approach to rights protection, YouTube signals that a person's likeness deserves protection like any other form of intellectual property, recognizing that a face and a voice are assets in a digital world and must be authentic to retain their value.
